Earthquakes in the Landscape of Neural Network

Introduction

Artificial neural networks have proven to be a very powerful tool for solving a wide variety of problems. A large number of researchers and engineers put their time and effort into developing the field, although there is still a lot to discover. Because of that, the field evolves so quickly that many architectures and techniques that were popular just a few years ago are now part of history. But not everything gets outdated, and certain techniques prove to be so useful that it’s hard to imagine deep learning without them.

In this article, I want to direct your attention to the lesser-known properties of one quite famous technique in deep learning. I want to show you how beautiful and interesting a concept can be, even when it is typically left behind in favor of more exciting ideas in this area.

What can you see?

Until now, I haven’t explained this mysterious “earthquake” phenomenon, and I would like to keep it that way for a little longer. I want to encourage you to focus on the properties of this phenomenon and see whether you can recognize something that, most likely, is already known to you.

As I mentioned earlier, certain clues can be found in the animation. To simplify things, we can focus our attention on a single frame and see what we can find.

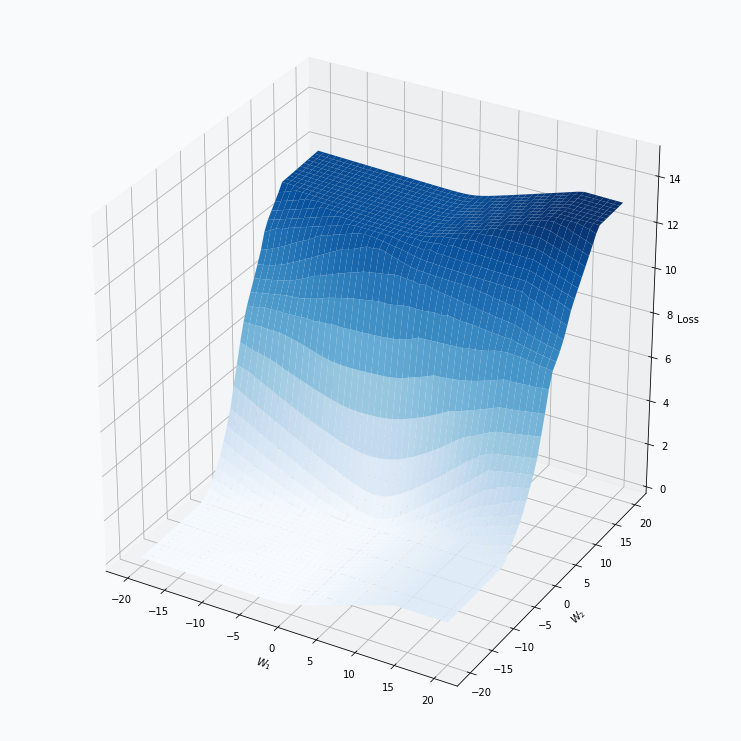

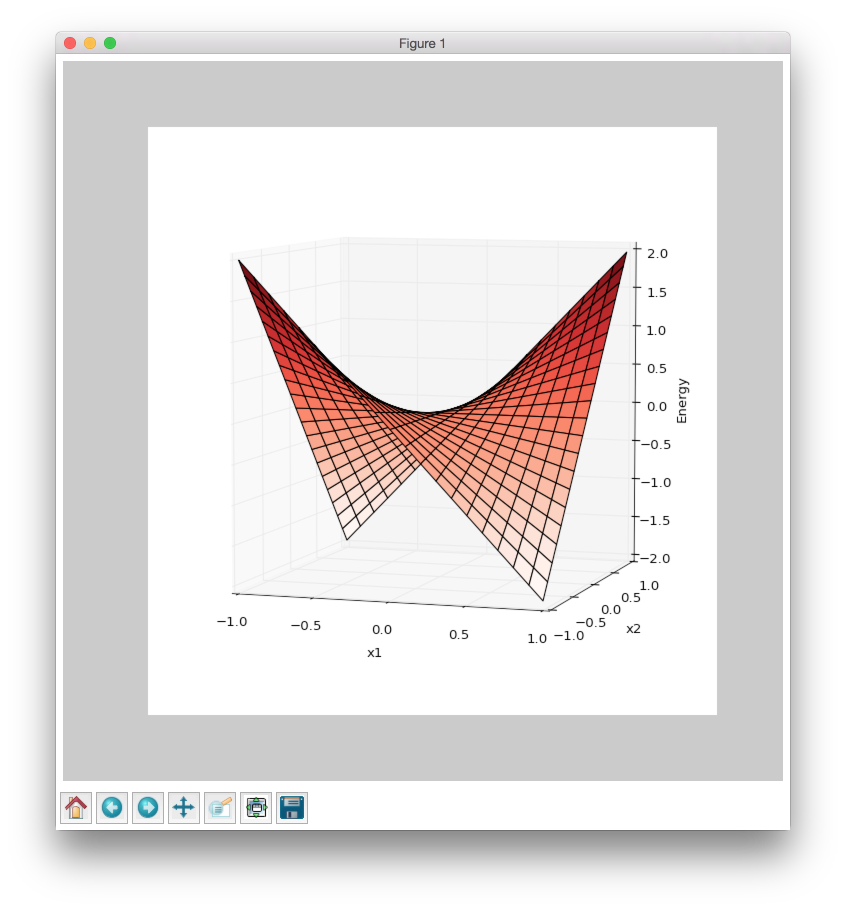

Some of you should be familiar with a graph like this. It has three axes, namely \(W_1\), \(W_2\) and Loss. Two of the axes, \(W_1\) and \(W_2\), correspond to two parameters (weights) of the network. The last axis shows the loss associated with each possible pair of parameters \(W_1\) and \(W_2\). This is what a neural network’s loss landscape looks like. Typically, during training, we start with some initial values of \(W_1\) and \(W_2\) and, with the help of an algorithm such as gradient descent, we navigate around the landscape trying to find the weights associated with the lowest value on this surface. Assuming that this part is clear, one question still remains: why does the loss landscape change in the animation?

Let’s think about what this change represents. For each fixed pair of weights \(W_1\), \(W_2\), the loss value associated with them changes. From this perspective it shouldn’t be that surprising, since we would never expect a network to always have exactly the same loss for a fixed set of weights. At this point I would like to stop, since we’re getting pretty close to the answer.

I would encourage you to take a moment, think about what we’ve observed so far in the animation and guess what might produce this effect on the loss landscape. I suggest you do it now, because the next section contains an explanation of this phenomenon.

Explanation

The animation shows what the neural network’s loss landscape looks like during training with mini-batches. It’s quite common to think of the loss surface as a static landscape that we navigate during training, trying to find weights that minimize the desired loss function. This view is accurate only for full-batch training, when all the available data samples are used at the same time. For most deep learning problems it is false: during training, we navigate a dynamically changing environment. In each training iteration, we take a set of input samples, propagate them through the network and estimate the gradient that we will use to adjust the network’s weights.
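The effect can be reproduced with a few lines of NumPy. The sketch below is not the code behind the animation; it assumes a hypothetical toy dataset and a logistic regression with two weights and a cross entropy loss, and it evaluates the loss on a grid of \(W_1\), \(W_2\) values for the full dataset and for two different mini-batches.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy dataset: 2 input features, binary targets
X = rng.normal(size=(1000, 2))
true_w = np.array([1.5, -2.0])
y = (X @ true_w + rng.normal(scale=0.5, size=1000) > 0).astype(float)


def loss_landscape(X_batch, y_batch, w1_grid, w2_grid):
    """Mean cross entropy of logistic regression for every (W1, W2) pair."""
    W1, W2 = np.meshgrid(w1_grid, w2_grid)
    # z has shape (grid_rows, grid_cols, batch_size)
    z = W1[..., None] * X_batch[:, 0] + W2[..., None] * X_batch[:, 1]
    # Per-sample loss (1 - y) * z + log(1 + exp(-z)), computed stably
    per_sample = (1 - y_batch) * z + np.logaddexp(0, -z)
    return per_sample.mean(axis=-1)


w_grid = np.linspace(-4, 4, 41)
full_batch = loss_landscape(X, y, w_grid, w_grid)

# Two different mini-batches give two different surfaces
idx_a = rng.choice(len(X), size=10, replace=False)
idx_b = rng.choice(len(X), size=10, replace=False)
landscape_a = loss_landscape(X[idx_a], y[idx_a], w_grid, w_grid)
landscape_b = loss_landscape(X[idx_b], y[idx_b], w_grid, w_grid)

# Largest difference between the two mini-batch landscapes
print(np.abs(landscape_a - landscape_b).max())
```

Plotting `landscape_a` and `landscape_b` one after another as contour plots gives a single "shake" of the landscape.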

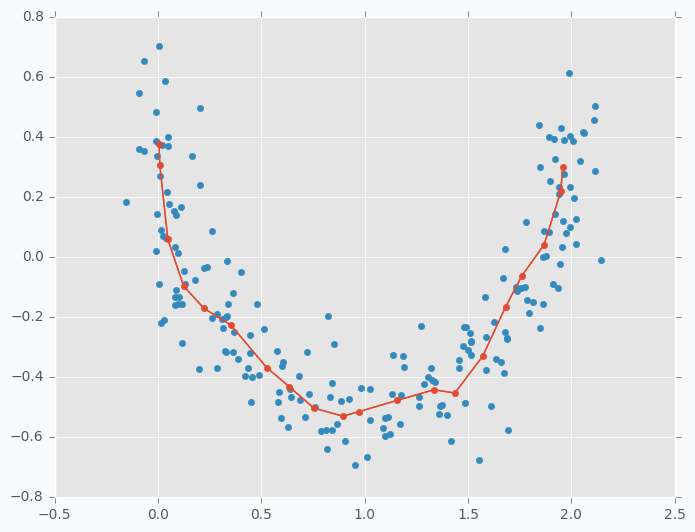

It might be easier to visualize training using contour plots. The same 3D animation can be represented in the following way:

The animation above shows only how the loss landscape changes when we use different mini-batches, but it doesn’t show the effect on training. We can extend this animation with gradient vectors.

The animation above alternates between two steps: a training iteration and a change of mini-batch. The training iteration shows a static landscape, and the vector shows how the weights changed after the update. The next training iteration requires a different mini-batch, and this changeover is visualized by a smooth transition between two landscapes. In reality, this change is not smooth, but the animation helps us notice its effect. The bigger the difference between the two losses, the more noticeable the transition between the two landscapes.

After completing multiple training iterations, we can see that the overall path looks a bit noisy. During each iteration the update points in the direction that reduces the loss on the current mini-batch, but continuous changes in the loss landscape make it quite difficult to find a stable direction. This problem can be reduced with algorithms that accumulate information about the gradient over time. If you want to learn more about that you can check this article.
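As a rough illustration of why accumulating gradient information helps, the sketch below compares raw noisy gradients with an exponential moving average of them. The "true" gradient, the noise level and the coefficient `beta` are all hypothetical values, and the moving average is only one of several ways momentum-like methods are formulated.

```python
import numpy as np

rng = np.random.default_rng(1)

true_gradient = np.array([1.0, -0.5])  # hypothetical full-batch gradient
beta = 0.9                             # assumed averaging coefficient

velocity = np.zeros(2)
raw, smoothed = [], []
for _ in range(500):
    # Mini-batch gradient = full-batch gradient + sampling noise
    noisy_gradient = true_gradient + rng.normal(scale=1.0, size=2)
    # Exponential moving average of the gradients seen so far
    velocity = beta * velocity + (1 - beta) * noisy_gradient
    raw.append(noisy_gradient)
    smoothed.append(velocity)

# Skip the warm-up phase and compare how much each direction fluctuates
raw_std = np.std(raw[100:], axis=0)
smoothed_std = np.std(smoothed[100:], axis=0)
print(raw_std, smoothed_std)
```

The averaged direction fluctuates much less than the individual mini-batch gradients, which is why the resulting path looks less noisy.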

Mathematical perspective

Unlike in classical mathematical optimization, in neural network training we don’t know exactly which function we are optimizing. It sounds a bit strange, but it’s true. Each mini-batch creates a different loss landscape, and during each iteration we optimize a different function. With random shuffling and a reasonably large mini-batch size, it’s quite unlikely that we will see exactly the same loss landscape twice during training. It even sounds a bit surreal if you think about it: we move towards the minimum of a function that we most likely won’t ever see again, and somehow the optimization algorithm arrives at a solution that works incredibly well for the target task, e.g. image classification.

Most loss functions used in deep learning calculate the loss independently for each input sample, and the overall loss is just the average of these losses. Notice that this property lets us obtain the gradient very easily, since the gradient of an average is the average of the gradients of the individual addends.

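\[E = \frac{1}{N} \sum_{i=1}^{N} e_i, \qquad \nabla E = \frac{1}{N} \sum_{i=1}^{N} \nabla e_i\]

where \(N\) is the number of samples in a mini-batch, \(E\) is the total loss and \(e_i\) is the loss associated with the \(i\)-th input sample.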
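This property is easy to check numerically. The sketch below assumes a logistic regression with a cross entropy loss and random, hypothetical data; it compares the average of the per-sample gradients with a finite-difference estimate of the gradient of the averaged loss.

```python
import numpy as np

rng = np.random.default_rng(2)
X = rng.normal(size=(8, 3))  # mini-batch of N = 8 samples
y = rng.integers(0, 2, size=8).astype(float)
w = rng.normal(size=3)


def sample_loss(w, x, y):
    # Cross entropy of logistic regression for a single sample
    z = x @ w
    return (1 - y) * z + np.logaddexp(0, -z)


def sample_gradient(w, x, y):
    # Derivative of the loss above with respect to w: (sigmoid(z) - y) * x
    z = x @ w
    return (1 / (1 + np.exp(-z)) - y) * x


def total_loss(w):
    return np.mean([sample_loss(w, x_i, y_i) for x_i, y_i in zip(X, y)])


# Average of the per-sample gradients
mean_of_gradients = np.mean(
    [sample_gradient(w, x_i, y_i) for x_i, y_i in zip(X, y)], axis=0)

# Gradient of the averaged loss, estimated with central differences
eps = 1e-6
gradient_of_mean = np.array([
    (total_loss(w + eps * e) - total_loss(w - eps * e)) / (2 * eps)
    for e in np.eye(3)
])

print(np.allclose(mean_of_gradients, gradient_of_mean, atol=1e-6))
```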

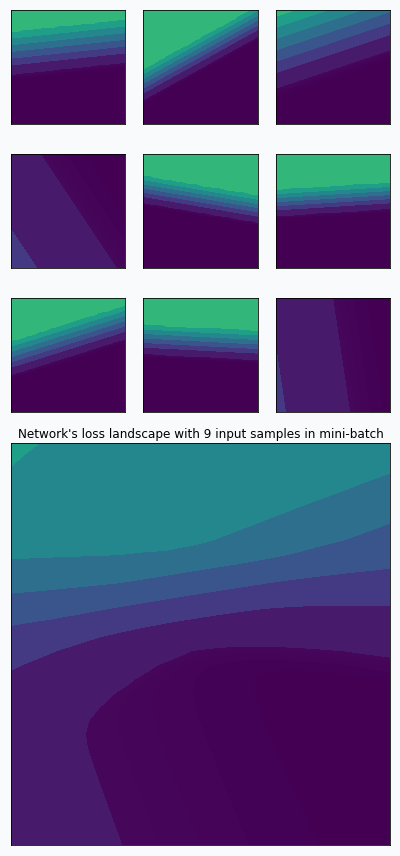

We can think of training as randomly sampling loss landscapes and using the average loss landscape to estimate the gradient. For example, we can take 9 random input samples and create a loss landscape for each individual sample. By averaging these landscapes, we obtain a new landscape.

In the graph above, you can notice that the 9 loss landscapes have linear contours, while the averaged loss landscape doesn’t have this property. Each individual loss landscape was obtained from a cross entropy loss function with simple logistic regression as the base network architecture. It can easily be shown that each individual loss is nearly a piecewise linear function.

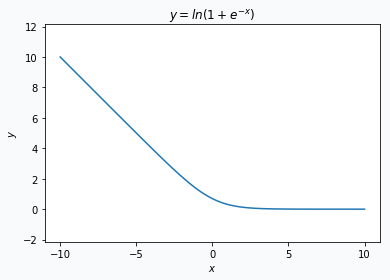

where \(x\) is an input vector, \(y \in \{0, 1\}\) is the target class and \(w\) is the vector of the network’s parameters.

The result can be separated into 2 parts. The first part is just a linear transformation of the input \(x\). The second part is a logarithm, but it behaves like a linear function when the absolute value of \(x^T w\) is reasonably large.
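The two parts can be written out explicitly. The derivation below is a reconstruction rather than the original formula: it assumes a sigmoid output \(\sigma(z) = 1 / (1 + e^{-z})\), no bias term and the standard binary cross entropy.

```latex
\begin{aligned}
e(w) &= -y \log \sigma(x^T w) - (1 - y) \log\left(1 - \sigma(x^T w)\right) \\
     &= y \log\left(1 + e^{-x^T w}\right)
        + (1 - y) \left(x^T w + \log\left(1 + e^{-x^T w}\right)\right) \\
     &= \underbrace{(1 - y)\, x^T w}_{\text{linear part}}
        + \underbrace{\log\left(1 + e^{-x^T w}\right)}_{\text{logarithmic part}}
\end{aligned}
```

When \(x^T w\) is large and positive, the logarithmic part is close to 0, and when it is large and negative, the logarithmic part is close to \(-x^T w\). In both regimes the loss is approximately linear in \(w\), which is consistent with the straight contours of the individual landscapes.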

Statistical perspective

We can also think about mini-batch training in terms of loss landscape sampling. In statistics, random sampling helps us derive properties of an entire population using summary statistics. For example, we might estimate the expected value of a population by calculating the average over a random sample. Obviously, the smaller the sample, the less certain we are about our estimate. The same is true (or rather nearly true) for loss landscape sampling. This effect was demonstrated in the first animation:

I used a mini-batch of size 10 for the graph on the left and size 100 for the graph on the right. We can see that the “earthquake” effect is stronger in the left graph, where the mini-batch size is smaller. Basically, the estimate of the loss landscape produced by a small mini-batch has quite a large variance, and this can be observed in the animation.
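The dependence of the variance on the mini-batch size can be checked with a small simulation. The sketch below fixes one hypothetical pair of weights, draws made-up per-sample losses for it and measures how much the mini-batch average fluctuates for batch sizes 10 and 100.

```python
import numpy as np

rng = np.random.default_rng(3)

# Hypothetical per-sample losses for one fixed pair of weights
per_sample_loss = rng.exponential(scale=1.0, size=10_000)


def minibatch_loss_std(batch_size, n_batches=500):
    """Standard deviation of the mini-batch loss over many random batches."""
    estimates = [
        rng.choice(per_sample_loss, size=batch_size, replace=False).mean()
        for _ in range(n_batches)
    ]
    return np.std(estimates)


std_10 = minibatch_loss_std(10)
std_100 = minibatch_loss_std(100)
print(std_10, std_100)
```

The estimate based on 10 samples fluctuates noticeably more than the one based on 100 samples, which corresponds to the stronger shaking in the left graph.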

I want to make one disclaimer about sampling. It’s not quite the same as the sampling I described for statistical inference. The training dataset is already a sample from a population, and each mini-batch is a sample from this sample. In addition, mini-batches are not sampled independently. Typically, before each training epoch, we shuffle the samples in our dataset and divide them into mini-batches, so the composition of the first mini-batch has an impact on the data distribution in the next one. For example, imagine a simple problem where we try to classify the digits 0 and 1 (binary classification on binary digits), and our training dataset contains as many 0s as 1s. Suppose that the first mini-batch has more 1s than 0s. It means that the rest of the training dataset has more 0s than 1s, so the next mini-batch is more likely to contain more 0s than 1s, since the probability was skewed by the first mini-batch. Whether this is good, bad or doesn’t matter might be a topic for a separate article, but the fact is that this sampling is not purely random.
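The dependence between consecutive mini-batches can be seen in a small simulation. The sketch below uses a hypothetical balanced dataset of 100 labels and a batch size of 20, shuffles it many times and measures the correlation between the number of 1s in the first and in the second mini-batch.

```python
import numpy as np

rng = np.random.default_rng(4)

# Balanced hypothetical dataset: 50 zeros and 50 ones
labels = np.array([0] * 50 + [1] * 50)
batch_size = 20

first_batch_ones, second_batch_ones = [], []
for _ in range(5000):
    shuffled = rng.permutation(labels)
    first_batch_ones.append(shuffled[:batch_size].sum())
    second_batch_ones.append(shuffled[batch_size:2 * batch_size].sum())

# Extra 1s in the first batch leave fewer 1s for the second one,
# so the two counts are negatively correlated
correlation = np.corrcoef(first_batch_ones, second_batch_ones)[0, 1]
print(correlation)
```

With independent sampling the correlation would be close to zero; with shuffling and splitting it is clearly negative.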

Final words

In my opinion, the term earthquake fits very naturally into the general intuition about neural network training. If you think about the loss landscape as an area with mountains and valleys, then an earthquake represents shaking and displacement of that land. The magnitude of that earthquake is controlled by the size of the mini-batch: the smaller the mini-batch, the stronger the shaking. And navigation in this environment can be compared to training with momentum.

Code

All the code that was used to generate the graphs and animations for this article can be found on GitHub.

Making Art with Growing Neural Gas

Introduction

I’ve been trying to make this type of art style for quite some time. I applied SOFM to images, but in most cases it was unsuccessful, mostly because SOFM requires a predefined size and structure of the network. With such a requirement it’s difficult to construct a tool that converts an image into a nice art style. Later, I learned more about Growing Neural Gas, and it helped to resolve the main issues with SOFM. In this article, I want to explain how this type of art style can be generated from an image. At the end, I will cover some similar, but less successful, applications of growing neural gas to image processing that I’ve been trying to develop.

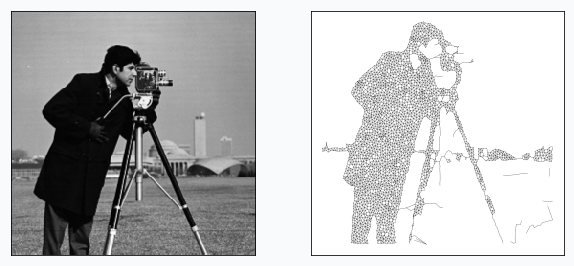

Image Processing Pipeline

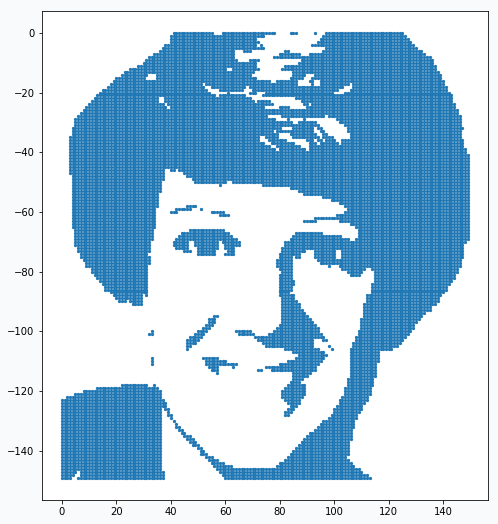

Images are not a very natural data structure for most machine learning algorithms, and Growing Neural Gas (GNG) is not an exception. For this reason, we need to represent the input image in a format that the network can understand. The right format for GNG is a set of data points. In addition, these data points have to resemble the original image. To achieve this, we can binarize the image, after which every pixel will be either black or white. We can use each black pixel as a data point and the pixel’s position as its features. In this way, we are able to extract the topological structure of the image and store it as a set of data points.

Conversion from a color image to a binary image requires three simple image processing steps that we will apply in sequence.

We need to load our image first

# skimage version 0.13.1
from skimage import data, img_as_float

astro = img_as_float(data.astronaut())
astro = astro[30:180, 150:300]

Convert color image to grayscale

from skimage import color

astro_grey = color.rgb2grey(astro)

Apply Gaussian blurring. It reduces the level of detail in the image.

from skimage.filters import gaussian

blured_astro_grey = gaussian(astro_grey, sigma=0.6)

Find the binarization threshold and convert to black every pixel that is below this threshold.

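from skimage.filters import threshold_otsu

# Increase threshold in order to add more
# details to the binarized image.
# The blurred image from the previous step is used here,
# so that the steps are applied in sequence.
thresh = threshold_otsu(blured_astro_grey) + 0.1
binary_astro = blured_astro_grey < thresh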

In some cases, it might be important to adjust the threshold in order to capture all the important details. In this example, I added 0.1 to the threshold.

And finally, it’s easy to make data points from the binary image.

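import numpy as np
import matplotlib.pyplot as plt

data = []
for (x, y), value in np.ndenumerate(binary_astro):
    if value == 1:
        # Use the column as the x-coordinate and the negated row as
        # the y-coordinate, since image rows grow downwards
        data.append([y, -x])

plt.scatter(*np.array(data).T)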

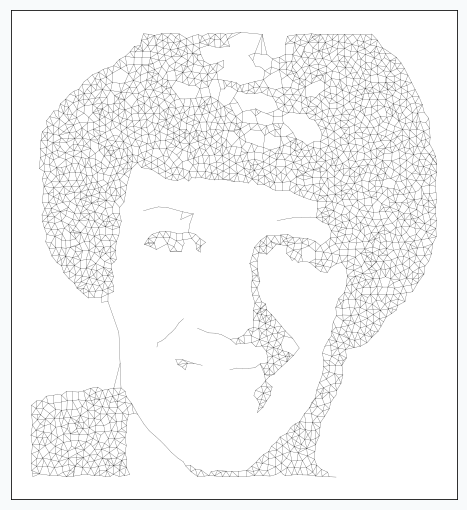

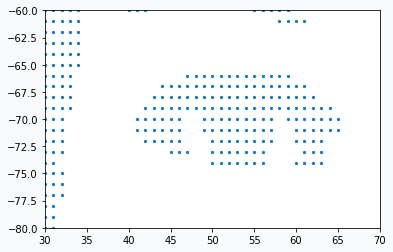

There are so many data points in the image that it’s not clear whether it’s really just a set of data points. But if you zoom in, you will see that it really is.

We’ve prepared our data, and now we need to learn a bit more about the GNG network.
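For large images the pixel-by-pixel loop can be slow. A vectorized alternative with the same coordinate convention might look like the sketch below; the small `binary_image` array is a hypothetical stand-in for the binarized image.

```python
import numpy as np

# Small hypothetical binary image standing in for the binarized picture
binary_image = np.array([
    [0, 1, 0],
    [1, 1, 0],
], dtype=bool)

# Row and column indices of every True pixel, in row-major order
rows, cols = np.nonzero(binary_image)
# Same convention as the loop: x is the column, y is the negated row
points = np.column_stack([cols, -rows])
print(points)
```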

Growing Neural Gas

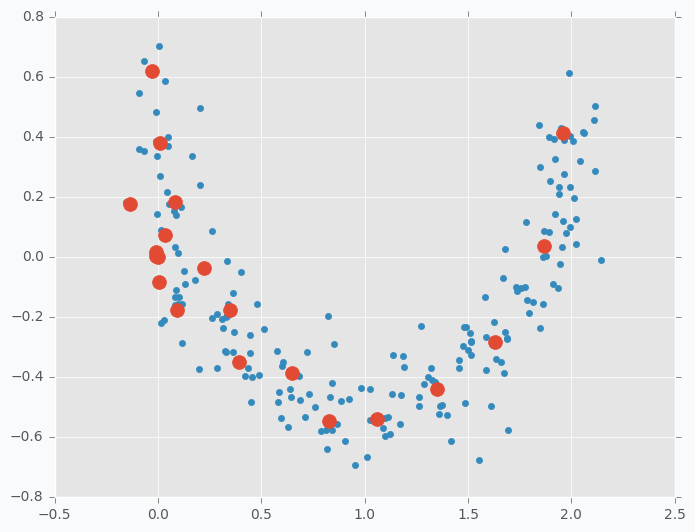

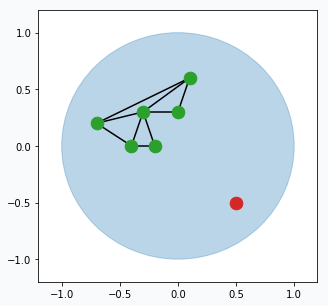

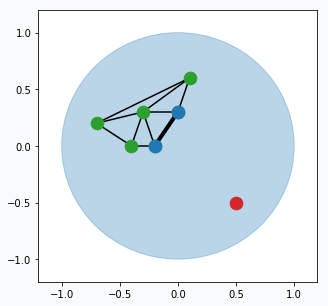

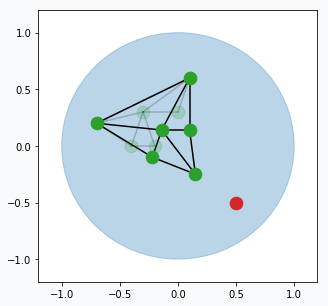

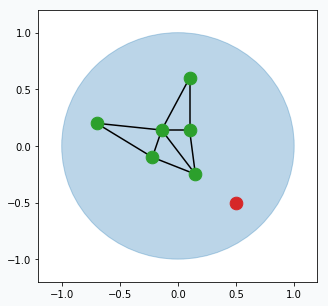

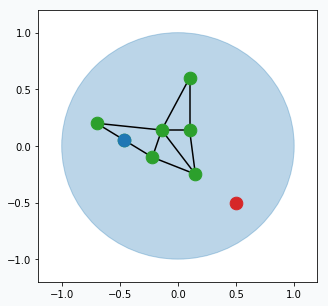

Growing Neural Gas is a very simple algorithm and it’s really easy to visualize. From the animation above you can see how it learns the shape of the data. The network typically starts with two random points and expands over the space.

In the original paper [1], the algorithm looks a bit complicated with all its variables and terminology, but in reality it’s quite simple. A simplified version of the algorithm might look like this:

Pick one data point at random (red data point).

The blue region represents a large set of data points that occupy space in the form of a unit circle. The green points connected with black lines are our GNG network. Green points are neurons, and each black line visualizes a connection between two neurons.

Find the two neurons closest to the sampled data point (blue data points) and connect these neurons with an edge.

Move the closest neuron towards the data point. In addition, you can move the neurons that are connected by an edge to the closest neuron towards the same point.

Each neuron has an error that accumulates over time. For every updated neuron we have to increase the error. The increase for each neuron is equal to the (Euclidean) distance from this neuron to the sampled data point. The further the neuron is from the data point, the larger the error.

Remove edges that haven’t been updated for a while (maybe after 50, 100 or 200 iterations, it’s up to you). If there are any neurons that don’t have edges, we can remove them too.

From time to time (maybe every 100 or 200 iterations) we can find the neuron that has the largest accumulated error. For this neuron we can find its neighbour with the highest accumulated error. Halfway between them we can create a new neuron (blue data point) that is automatically connected to these two neurons, and the original edge between them is destroyed.

You can think about this step in the following way. Find the neuron that typically makes the most errors and add one more neuron near it. This new neuron will help the other neuron to reduce its accumulated error. A reduction in error means that we capture the structure of our data better.

Repeat all the steps many times.

A few small extensions have to be added to the algorithm before it can be called Growing Neural Gas, but the most important principles are there.
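The simplified steps above can be sketched in plain NumPy. This is an illustration of the description in this article, not the full algorithm from [1] and not the implementation used to produce the images. The function name, all parameter values and the data are hypothetical, and the error halving after growth is an extra assumption that is not part of the steps above.

```python
import numpy as np


def simplified_gng(data, n_iterations=2000, max_age=200, grow_every=100,
                   max_neurons=20, step=0.1, neighbour_step=0.01, seed=0):
    """Sketch of the simplified steps; not the full algorithm from [1]."""
    rng = np.random.default_rng(seed)
    first_ids = rng.choice(len(data), size=2, replace=False)
    weights = {0: data[first_ids[0]].astype(float),
               1: data[first_ids[1]].astype(float)}
    errors = {0: 0.0, 1: 0.0}
    edges = {}  # frozenset({a, b}) -> iterations since the last update
    next_id = 2

    def neighbours_of(node):
        return [other for edge in edges if node in edge
                for other in edge if other != node]

    for iteration in range(1, n_iterations + 1):
        # Pick one data point at random
        x = data[rng.integers(len(data))]

        # Find the two closest neurons and connect (or refresh) their edge
        ids = sorted(weights, key=lambda i: np.linalg.norm(x - weights[i]))
        winner, runner_up = ids[0], ids[1]
        edges[frozenset((winner, runner_up))] = 0

        # Move the winner and its neighbours towards the data point and
        # increase the error of every updated neuron by its distance
        updates = [(winner, step)]
        updates += [(n, neighbour_step) for n in neighbours_of(winner)]
        for node, rate in updates:
            errors[node] += np.linalg.norm(x - weights[node])
            weights[node] = weights[node] + rate * (x - weights[node])

        # Age all edges, drop the ones not updated for a while,
        # then drop neurons that are left without edges
        for edge in list(edges):
            edges[edge] += 1
            if edges[edge] > max_age:
                del edges[edge]
        connected = set().union(*edges) if edges else set()
        for node in list(weights):
            if node not in connected:
                del weights[node], errors[node]

        # From time to time insert a neuron between the neuron with the
        # largest error and its neighbour with the largest error
        if iteration % grow_every == 0 and len(weights) < max_neurons:
            worst = max(errors, key=errors.get)
            worst_neighbour = max(neighbours_of(worst), key=errors.get)
            weights[next_id] = (weights[worst] + weights[worst_neighbour]) / 2
            del edges[frozenset((worst, worst_neighbour))]
            edges[frozenset((worst, next_id))] = 0
            edges[frozenset((worst_neighbour, next_id))] = 0
            # Assumption (not in the steps above): halve the errors so that
            # the same neuron is not selected on every growth step
            errors[worst] /= 2
            errors[worst_neighbour] /= 2
            errors[next_id] = errors[worst]
            next_id += 1

    return weights, edges


# Hypothetical data: points spread uniformly inside a unit circle
data_rng = np.random.default_rng(0)
angles = data_rng.uniform(0, 2 * np.pi, 500)
radii = np.sqrt(data_rng.uniform(0, 1, 500))
circle = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])

gng_weights, gng_edges = simplified_gng(circle)
print(len(gng_weights), len(gng_edges))
```

Neurons and edges are stored in dictionaries so that removing a neuron does not require renumbering the remaining ones.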

Putting Everything Together

And now we are ready to combine the power of the image processing pipeline with Growing Neural Gas.

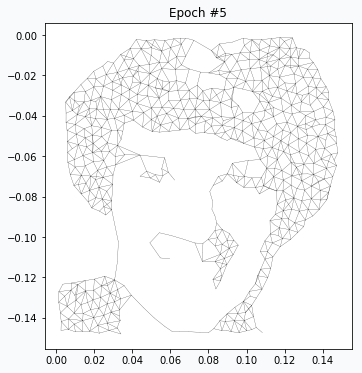

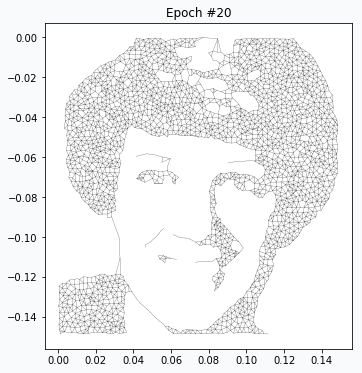

After running for one epoch we can already see some progress. The generated network captures some distinctive features of our original image. At this point it’s pretty obvious that we don’t have enough neurons in the network to capture more details.

After 4 more iterations, the image looks much closer to the original. You can notice that regions with a large number of data points have developed properly, but small features like the eyes, nose and mouth haven’t been formed yet. We just have to wait longer.

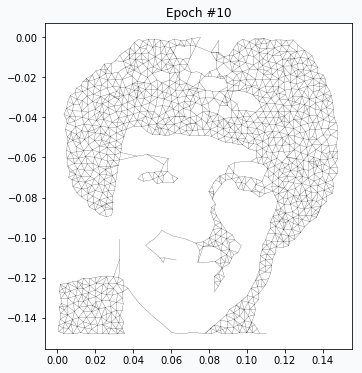

After 5 more iterations the eyebrows and eyes look better. Even the hair has a more complex shape.

On the 20th iteration the network’s training was stopped, since we had achieved the desired image quality.

Reveal Issues with More Examples

I’ve been doing some experiments with other images as well, and there are a few problems that I’ve encountered.

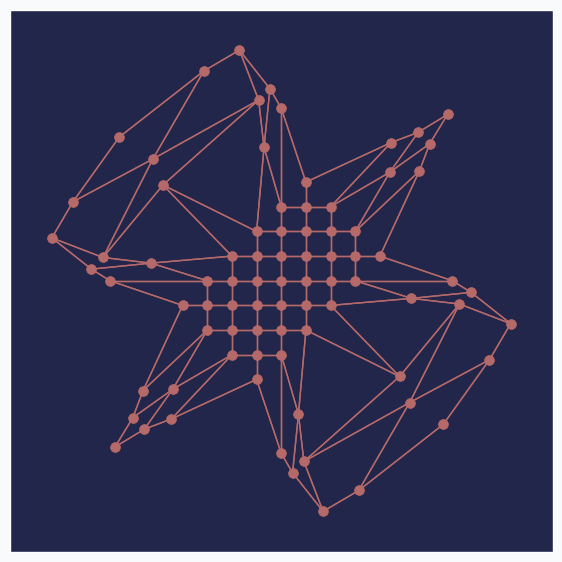

There are two main components in the art style generation procedure, namely the image processing pipeline and GNG. Let’s look at a problem with the GNG network first. It can be illustrated with the following image.

If you compare the horses you will notice that the horse in the right image looks a bit skinnier than the one on the left. This happens because neurons in the GNG network are not able to reach the edges of the image. Over one training pass through the full dataset each neuron gets pulled in many directions, and over the training process it settles somewhere in the middle, in order to be as close as possible to every sample that pulls it. The more neurons you add to the network, the closer it will get to the edge.

Another problem is related to image binarization, the most difficult step in our image processing pipeline. It’s difficult because each binarization method relies on a certain set of assumptions that can easily fail for different images, and there is no general way to do it. You don’t have this difficulty with the network. It can give you pretty decent results for different images using the same configuration. The only thing that you typically need to control is the maximum number of neurons in the network. The more neurons you allow the network to use, the better the quality of the image it produces.

In this article, I used a global binarization method for image processing. This type of binarization generates a single threshold for all pixels in the image, which can cause problems. Let’s look at the image below.

You can see that there are some buildings in the background of the left image, but there are none in the right one. It’s hard to capture multiple objects using a single threshold, especially when they have different shades. For more complex cases you might try local thresholding methods.
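To illustrate the idea behind local thresholding, the sketch below binarizes every block of the image with its own mean instead of one global value. It is a deliberately simple NumPy-only variant with hypothetical names and values; image processing libraries provide more refined local methods (see [2]).

```python
import numpy as np


def blockwise_binarize(grey_image, block_size=32, offset=0.0):
    """Binarize each block with its own mean instead of one global value."""
    binary = np.zeros(grey_image.shape, dtype=bool)
    height, width = grey_image.shape
    for top in range(0, height, block_size):
        for left in range(0, width, block_size):
            block = grey_image[top:top + block_size, left:left + block_size]
            # Pixels darker than the local mean (plus offset) become True
            binary[top:top + block_size, left:left + block_size] = (
                block < block.mean() + offset)
    return binary


# Hypothetical image: a dark pixel on a grey background (left half)
# and a light-grey pixel on an almost white background (right half)
image = np.full((4, 8), 0.5)
image[:, 4:] = 0.9
image[1, 1] = 0.2
image[2, 6] = 0.7

local_binary = blockwise_binarize(image, block_size=4)
global_binary = image < 0.6  # one threshold for the whole image
print(local_binary.sum(), global_binary.sum())
```

In this toy example the single global threshold marks the whole left half as black and misses the object on the right, while the block-wise version picks out exactly the two object pixels.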

Applying Similar Approach to Text

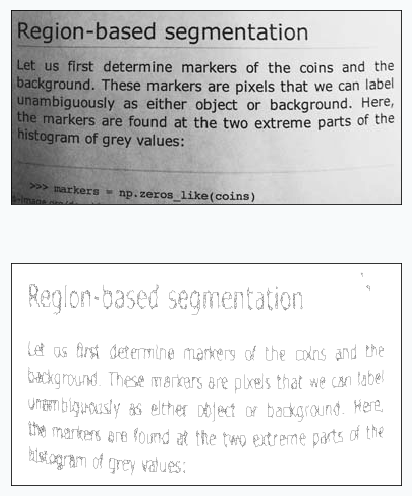

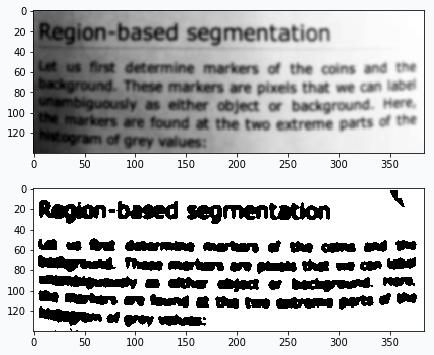

I’ve also been experimenting with text images. In the image below you can see the result.

It’s even possible to read the text generated by the network. It’s also interesting that, with a slight modification to the algorithm, you can count the number of words in the image. We just need to add more blurring and, after training, count the number of subgraphs in the network.

After many reruns I typically get a number that is very close to the right answer (44 words if you count “Region-based” as two words).
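Counting subgraphs is the same as counting connected components of the network. A minimal sketch in pure Python is shown below; it assumes that neurons are numbered from 0 and that edges are given as pairs of neuron indices, which is a hypothetical representation rather than the one used by a particular library.

```python
def count_subgraphs(n_nodes, edges):
    """Count connected components in an undirected graph."""
    adjacency = {node: set() for node in range(n_nodes)}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen = set()
    n_components = 0
    for start in adjacency:
        if start in seen:
            continue
        # Every unvisited node starts a new component
        n_components += 1
        stack = [start]
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            stack.extend(adjacency[node] - seen)
    return n_components


# Hypothetical network: two "words" made of 3 and 2 connected neurons
example_edges = [(0, 1), (1, 2), (3, 4)]
print(count_subgraphs(5, example_edges))
```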

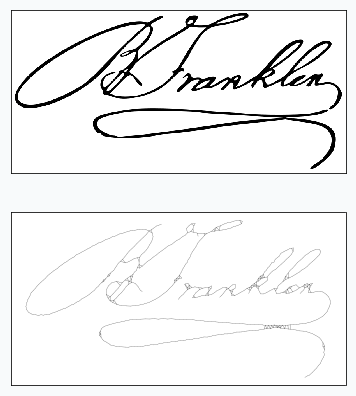

I also tried to train a GNG network that captures the trajectory of a signature. There are a few issues that I couldn’t overcome. In the image below you can clearly see some of them.

You would expect to see a signature as a continuous line, and this property is hard to achieve using GNG. In the image above you can see a few places where the network tries to cover some regions with small polygons and lines, which looks very unnatural.

Final Words

Beautiful patterns generated from images probably don’t reflect the real power of the GNG network, but I think that the beauty behind the algorithm shouldn’t be underappreciated only because it’s not useful for solving real-world problems. There are not many machine learning algorithms that can be used for artistic applications, and it’s pretty cool when they work even though they weren’t designed for this purpose.

I had a lot of fun trying different ideas and I encourage you to try it as well. If you’re new to machine learning, GNG is an easy place to start, and if you’re an expert, I can try to motivate you by saying that it’s quite refreshing to work with neural networks that can be easily interpreted and analyzed.

Learn More

If you want to learn more about algorithms similar to GNG, you can read about SOFM. As I said at the beginning of the article, it doesn’t work as nicely as GNG for images, but you can write pretty cool text styles or generate beautiful patterns. It also has some other interesting applications (even in deep learning).

Code

A few notebooks with code are available on GitHub.

- Main notebook that generates all the images using GNG

- Growing Neural Gas animation notebook

- Notebook that generates step by step visualization images for the Growing Neural Gas algorithm

References

| [1] | A Growing Neural Gas Network Learns Topologies, Bernd Fritzke. https://papers.nips.cc/paper/893-a-growing-neural-gas-network-learns-topologies.pdf |

| [2] | Thresholding, tutorial from scikit-image library http://scikit-image.org/docs/dev/auto_examples/xx_applications/plot_thresholding.html |

| [3] | Thresholding (image processing), wikipedia article https://en.wikipedia.org/wiki/Thresholding_%28image_processing%29 |

Create unique text-style with SOFM

Introduction

In this article, I want to show how to generate a unique text style using Self-Organizing Feature Maps (SOFM). I won’t explain how SOFM works in this article, but if you want to learn more about the algorithm you can check these articles.

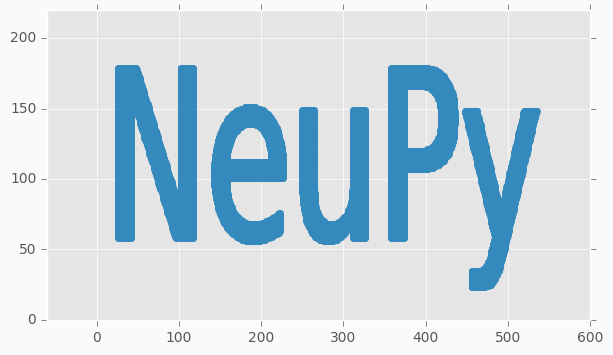

Transforming text into the data

To start, we need to have some text prepared. I generated the image using matplotlib, but anything else that is able to generate an image from text will work.

import matplotlib.pyplot as plt

red, blue, white = ('#E24A33', '#348ABD', '#FFFFFF')

ax = plt.gca()

ax.patch.set_facecolor(white)

ax.text(0, 0.25, 'NeuPy', fontsize=120)

plt.xticks([])

plt.yticks([])

plt.savefig('neupy-text.png', facecolor=white, bbox_inches='tight')

We cannot train SOFM on an image directly, so we have to transform the image into a set of data points. To do this, we will encode every black pixel as a data point and ignore white pixels. It’s hard to see from the picture, but not all pixels are black or white. If you zoom in close enough you will see that there are some gray pixels near the edge of each letter. For this reason, we have to binarize our image first.

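# Note: scipy.misc.imread was removed in SciPy 1.2; on newer
# versions an image reader from another library (for example
# imageio) can be used instead
from scipy.misc import imread

neupy_text = imread('neupy-text.png')
# Encode black pixels as 1 and white pixels as 0
neupy_text = (1 - neupy_text / 255.).round().max(axis=2)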

After binarization we have to select all pixels that have value 1 and use the pixel coordinates as data point coordinates.

import numpy as np

data = []
for (x, y), value in np.ndenumerate(neupy_text):
    if value == 1:
        # Flip the row index and shift it up, so that
        # the text is not upside down
        data.append([y, -x + 300])

data = np.array(data)

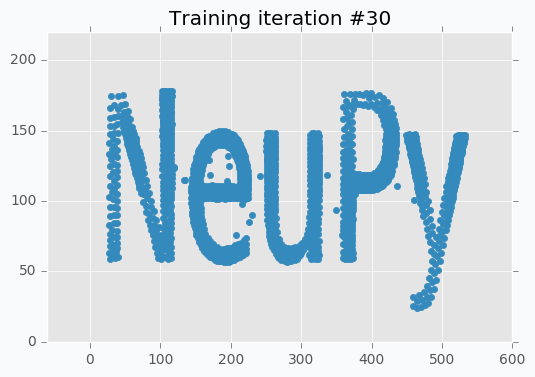

We can use a scatter plot to show that the collected data points still resemble the shape of the original text.

plt.scatter(*data.T, color=blue)

plt.show()

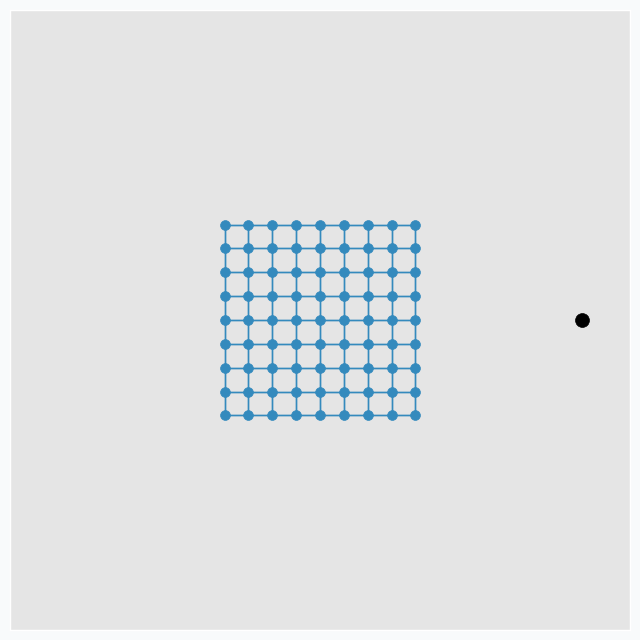

Weight initialization

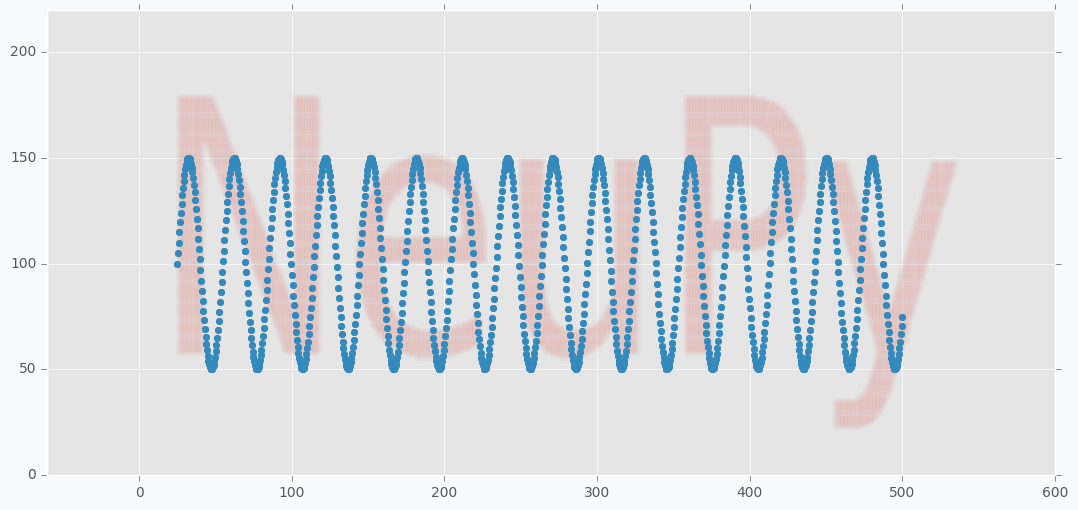

Weight initialization is a very important step. With the default random initialization it can be difficult for the network to cover the text, since in many cases neurons will have to travel across the whole image in order to get closer to their neighbors. To avoid this issue we have to generate the grid of neurons manually, so that it is easier for the network to cover the text.

I tried many different patterns and most of them work, but one-dimensional or nearly one-dimensional grids produced the best patterns. This is mostly because patterns generated using a two-dimensional grid look very similar to each other. Using a one-dimensional grid is like covering the same text with a long string: the network is forced to stretch and roll up in order to cover the text. I use the term nearly one-dimensional because that’s the shape I used in the end. “Nearly” means that the grid is two-dimensional, but because the number of neurons along one dimension is much larger than along the other, we can think of it as almost one-dimensional. In the final solution I used a grid with shape 2x1000.

# This parameter will be also used in the SOFM
n = 1000

# Generate weights and arrange them along a sine wave.
# Because the sine wave goes up and down the final pattern
# will look more interesting.
weight = np.zeros((2, n))

# Width of the weights was selected specifically for the NeuPy text
weight[0, :] = np.linspace(25, 500, n)

# Amplitude of the sine function was also selected in order
# to roughly match the height of the text
weight[1, :] = (np.sin(np.linspace(0, 100, n)) + 1) * 50 + 50

# Duplicate the neurons in order to get the 2 x n grid
weight = np.concatenate([weight, weight], axis=1)

You can notice from the code that I applied a sine function to the y-axis coordinates of the grid. With a two-dimensional grid it’s easy to cover the text: we just put a large rectangular grid over it. With a nearly one-dimensional grid it’s a bit trickier. We need a curve that covers the whole text, and a sine wave is one of the simplest functions with this property. From the image below you can see how nicely it covers our text.

plt.figure(figsize=(16, 6))

plt.scatter(*weight, zorder=100, color=blue)

plt.scatter(*data.T, color=red, alpha=0.01)

plt.show()

Training network

And the last step is to train the network. It took me some time to find the right parameters for the network. Typically it was easy to see that something was wrong with training when all the neurons started forming strange shapes that looked nothing like the text. I found the main problem a bit later. Because we have roughly 20,000 data points and 2,000 neurons, we make too many updates during one iteration without reducing the parameter values. Reducing the step size helped to solve this issue, because every update makes a small change to the grid, and lots of these small changes add up to a noticeable difference.

from neupy import algorithms

sofm = algorithms.SOFM(
    n_inputs=2,
    features_grid=(2, n),
    weight=weight,

    # With large number of training samples it's safer
    # to use small step (learning rate)
    step=0.05,

    # Learning radius is large for the first 10 iterations, after that we
    # assume that neurons found good positions on the text and we just
    # need to move them a bit independently in order to cover text better
    learning_radius=10,

    # after 10 iterations learning radius would be 0
    reduce_radius_after=1,

    # slowly decrease step size
    reduce_step_after=10,
)

Because of the small step size we have to do more training iterations. It takes more time to converge, but the final results are more robust to small changes in the input data. It’s possible to speed up the overall process by tuning the parameters more carefully, but I decided that this was good enough.

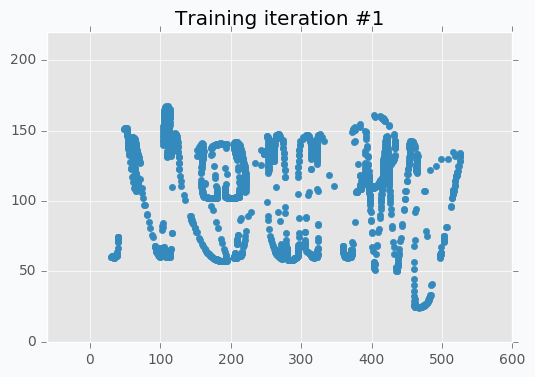

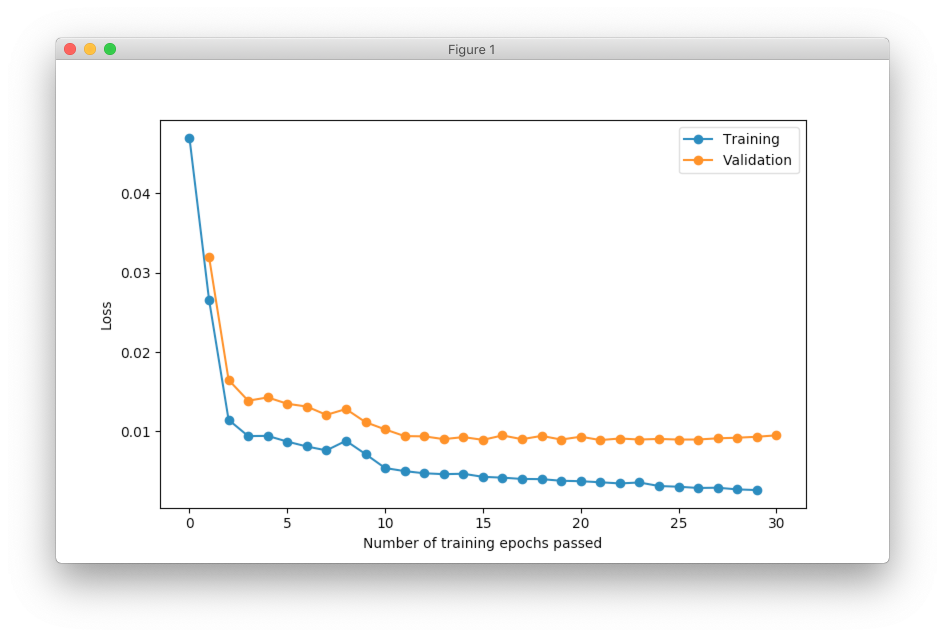

I ran the training procedure for 30 iterations.

for iteration in range(30):
    sofm.train(data, epochs=1)

    plt.title('Training iteration #{}'.format(iteration))
    plt.scatter(*sofm.weight, color=blue)
    plt.show()

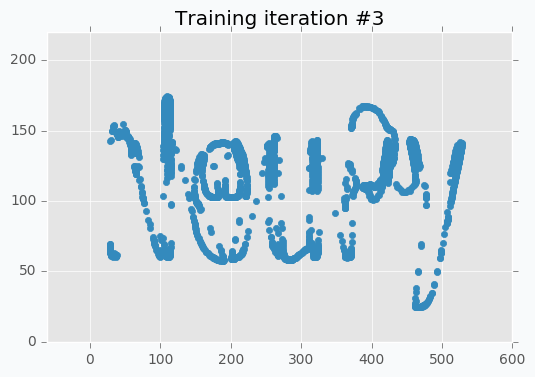

SOFM was trained for only one iteration and we can already vaguely see most of the letters. Let’s wait a few more iterations.

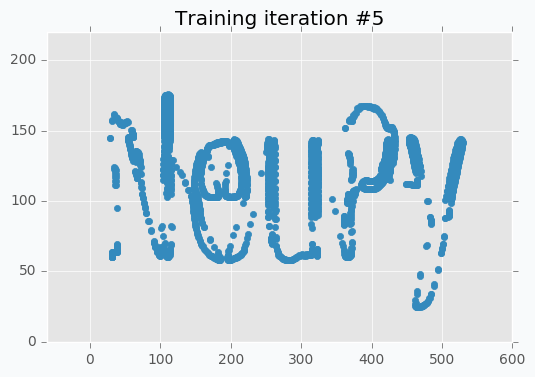

Now it’s much clearer that the network makes progress during training. And after 5 more iterations it almost perfectly covers the text.

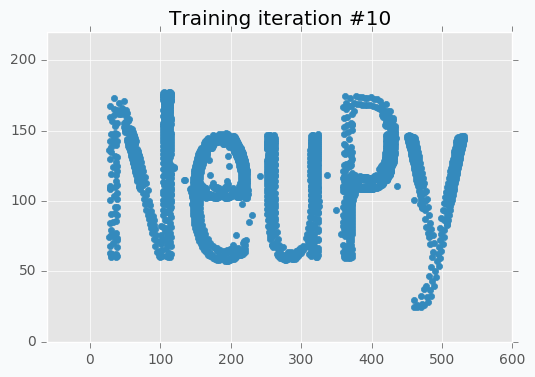

But even after 10 iterations we can see that some of the letters still require some polishing. For instance, the left part of the letter N hasn’t been properly covered.

In addition, it’s important to point out that we specified a step reduction after every 10 iterations. It means that from now on we won’t move neurons as much as we did before. Also, the learning radius was reduced to zero, which means that after the 10th iteration each neuron moves independently. These two changes are exactly what we need. We can see from the picture that the network covers the text pretty well, but small changes will make it look even better.

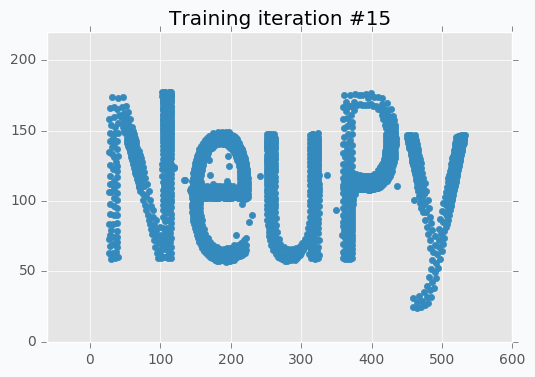

You can notice that there is almost no difference between iterations #15 and #30. It doesn’t look like we made any progress after the 15th iteration, but that’s not true. If you stop training after the 15th iteration, you will notice that some parts of the letters look a bit odd. The last 15 iterations make small changes that aren’t noticeable in the scatter plot, but they are important.

And finally, after all the training iterations, we can use our weights to generate the logo.

# Function comes from the neupy's examples folder

from examples.competitive.utils import plot_2d_grid

background_color = '#22264b'

text_color = '#e8edf3'

fig = plt.figure(figsize=(14, 6))

ax = plt.gca()

ax.patch.set_facecolor(background_color)

sofm_weights = sofm.weight.T.reshape((2, n, 2))

plot_2d_grid(np.transpose(sofm_weights, (2, 0, 1)), color=text_color)

plt.xticks([])

plt.yticks([])

# Coordinates were picked so that text

# will be in the center of the image

plt.ylim(0, 220)

plt.xlim(-10, 560)

plt.show()

Generalized approach for any text

There are some challenges that you may face when you try to adapt this solution to a different text. First of all, you may have noticed from the code that I hard-coded the bounds of the text. In a more general solution they can be identified from the image, although it makes the solution more complex. For instance, the right bound of the text can be taken from the data point with the largest x-coordinate, and the upper bound from the data point with the largest y-coordinate. The second problem is related to the parameters of the SOFM. The main idea was to make lots of small updates for a long time, but this might fail for a text with more letters, because we will have more data points and more updates during each iteration. This problem can be solved by reducing the step size.
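As a sketch of the first point, the bounds can be computed from the data points and used to scale the sine wave. The function name `sine_grid_weights` and the parameter `n_waves` are hypothetical, and the sample data is random; the number of waves would still have to be tuned for a particular text.

```python
import numpy as np


def sine_grid_weights(data, n=1000, n_waves=16):
    """Build 2 x 2n initial weights that follow a sine wave over the data."""
    # Bounds of the text are taken from the data instead of being hard-coded
    x_min, y_min = data.min(axis=0)
    x_max, y_max = data.max(axis=0)

    weight = np.zeros((2, n))
    weight[0, :] = np.linspace(x_min, x_max, n)
    # Scale the sine wave from [-1, 1] to [y_min, y_max]
    wave = np.sin(np.linspace(0, 2 * np.pi * n_waves, n))
    weight[1, :] = y_min + (wave + 1) / 2 * (y_max - y_min)
    # Duplicate the neurons to get the nearly one-dimensional 2 x n grid
    return np.concatenate([weight, weight], axis=1)


# Hypothetical data points standing in for the pixels of some text
sample_rng = np.random.default_rng(0)
sample_data = sample_rng.uniform([10, 20], [400, 180], size=(500, 2))

initial_weight = sine_grid_weights(sample_data, n=100)
print(initial_weight.shape)
```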

Further reading

If you want to learn more about SOFM, you can read the “Self-Organizing Maps and Applications” article that covers basic ideas behind SOFM and some of the problems that can be solved with this algorithm.

Code

All the code that was used to generate the images in this article can be found in an IPython notebook on GitHub.

The Art of SOFM

Introduction

In this article, I just want to show how beautiful a neural network can sometimes be. I think it’s quite rare that an algorithm can not only extract knowledge from data, but also produce something beautiful using exactly the same set of training rules without any modifications.

The main idea

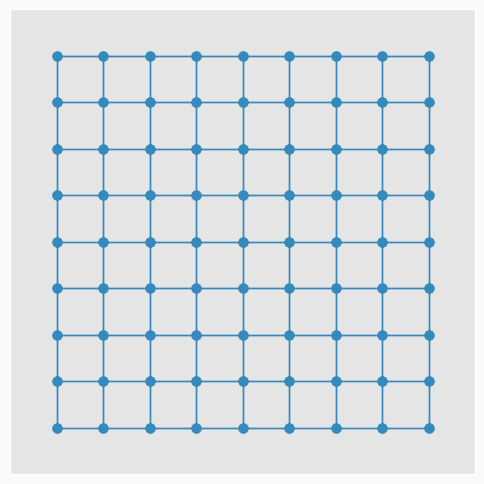

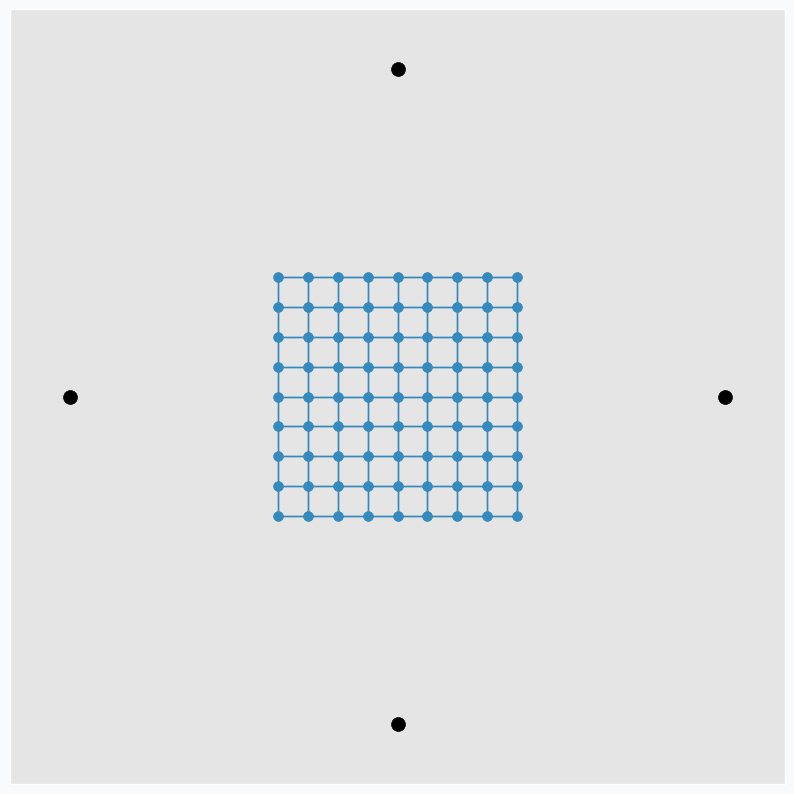

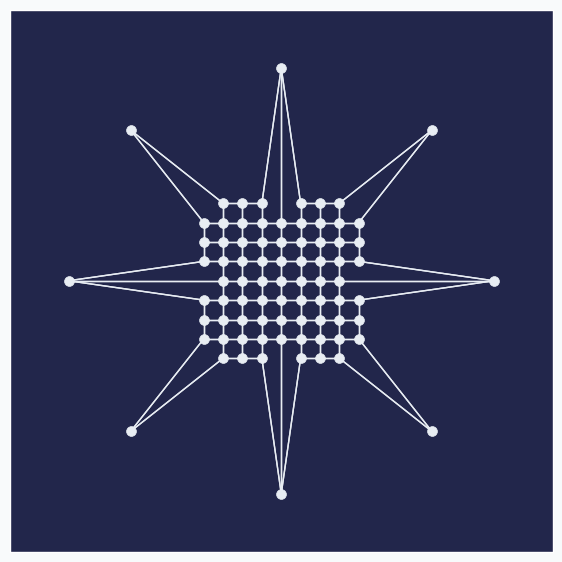

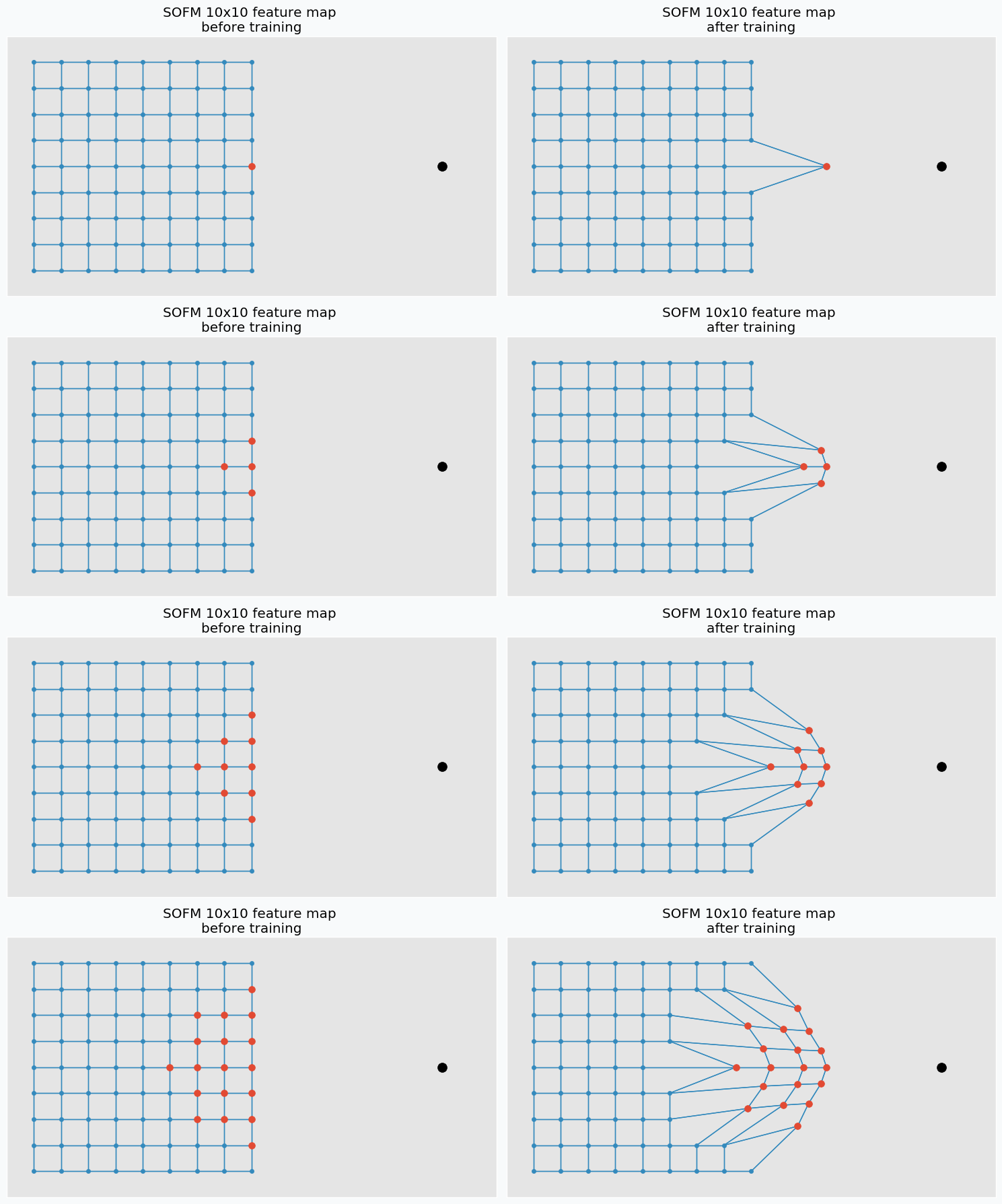

SOFM consists of multiple neurons that are typically arranged in a grid, with connections between some of the neurons. In this article, we will use a square grid that looks like this.

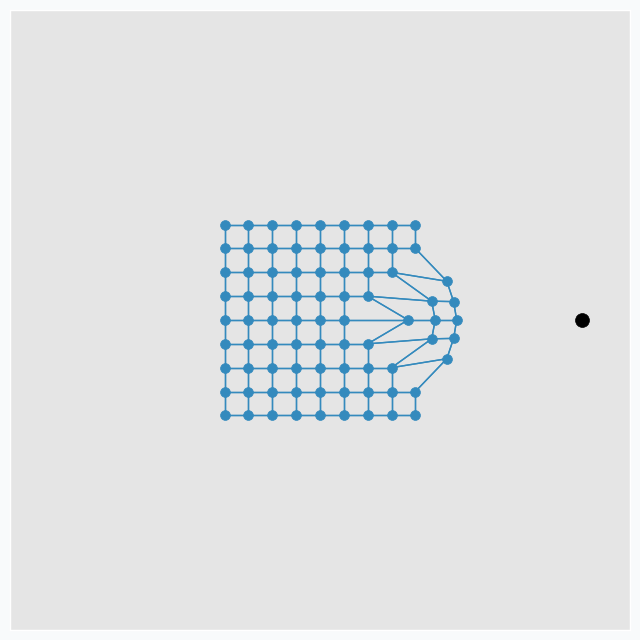

During a training iteration we introduce some data point near the grid.

SOFM finds the neuron that is closest to the introduced data point and pushes it towards this data point. In addition, it finds a few neighbour neurons within a specified radius, which we call the learning radius, and pushes these neurons towards the data point as well, but not as much as it pushed the closest neuron.
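A single update of this kind can be sketched in NumPy. The function below is an illustration, not the library’s implementation: the names, the square neighbourhood on the grid and the constant `neighbour_scale` factor for the neighbours are assumptions.

```python
import numpy as np


def sofm_update(grid, x, step=0.3, learning_radius=1, neighbour_scale=0.5):
    """One update of a (rows, cols, 2) grid of neurons towards point x."""
    rows, cols, _ = grid.shape
    distances = np.linalg.norm(grid - x, axis=2)
    win_row, win_col = np.unravel_index(distances.argmin(), distances.shape)

    updated = grid.copy()
    for row in range(rows):
        for col in range(cols):
            # Distance on the grid (in steps), not in the data space
            grid_distance = max(abs(row - win_row), abs(col - win_col))
            if grid_distance == 0:
                rate = step                    # closest neuron
            elif grid_distance <= learning_radius:
                rate = step * neighbour_scale  # its neighbours
            else:
                continue                       # everything else stays put
            updated[row, col] += rate * (x - grid[row, col])
    return updated


# 5x5 square grid where the neuron at (i, j) has coordinates (i, j)
square_grid = np.stack(
    np.meshgrid(np.arange(5.0), np.arange(5.0), indexing='ij'), axis=2)
point = np.array([6.0, 2.0])

new_grid = sofm_update(square_grid, point)
print(new_grid[4, 2], new_grid[3, 2])
```

The closest neuron moves 30% of the way towards the point, its grid neighbours move 15% of the way, and all other neurons stay where they were.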

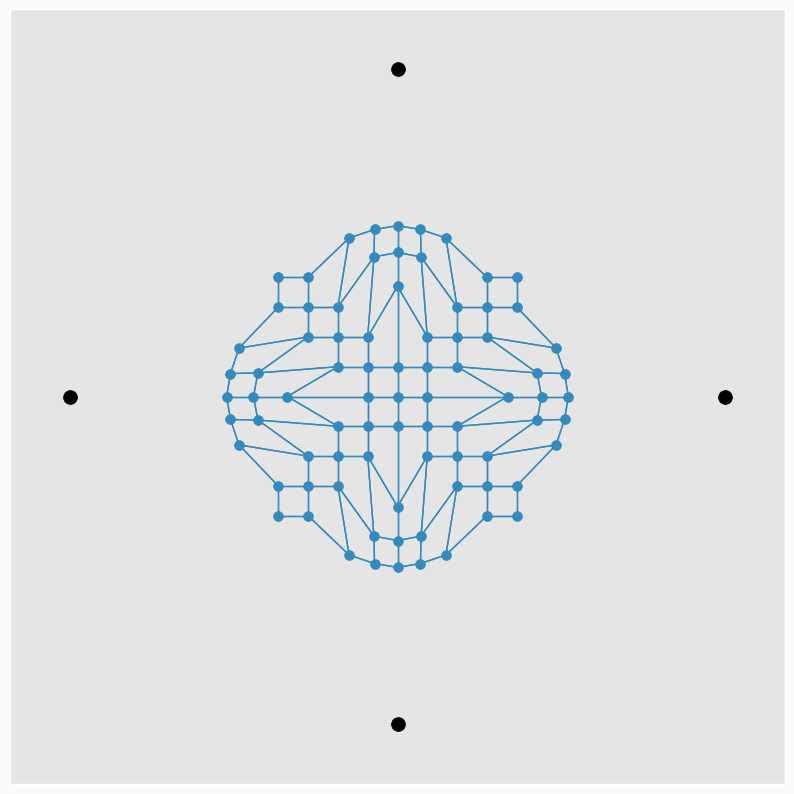

To make it more interesting, we can put a few extra points around the same grid.

And by applying one update per data point at a time, we can get a nice pattern.

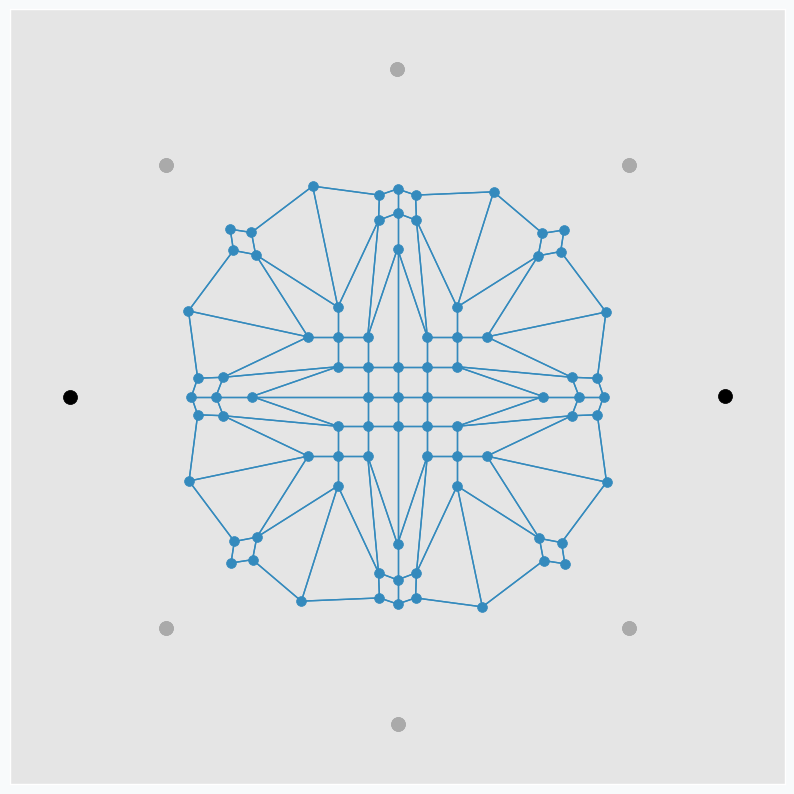

We can make the patterns look more interesting if we start moving the data points around. Let’s now use only two points and put one to the left and one to the right of the grid. We do the same SOFM update again, but as soon as it’s done we rotate the two data points by 45 degrees counterclockwise. After repeating this process a few times, we get another pattern.

Black dots are the two initial data points. Gray dots show the places where these two data points were located after each new 45 degree rotation.

It’s pretty cool that this simple approach can produce such beautiful patterns. We can even define a set of variables that we can change in order to generate different patterns.
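The rotation itself is a multiplication by a 2x2 rotation matrix. The sketch below assumes that the grid is centred at the origin and uses hypothetical coordinates for the two points.

```python
import numpy as np

angle = np.deg2rad(45)
rotation = np.array([
    [np.cos(angle), -np.sin(angle)],
    [np.sin(angle), np.cos(angle)],
])

# Two data points placed to the left and to the right of the grid
# (hypothetical coordinates, with the grid centred at the origin)
points = np.array([[-3.0, 0.0], [3.0, 0.0]])

positions = [points]
for _ in range(8):
    # Rotate every point counterclockwise around the origin
    points = points @ rotation.T
    positions.append(points)

print(np.round(positions[1], 3))
```

After eight rotations of 45 degrees the two points return to their initial positions, so the sequence of positions repeats.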

Making patterns more interesting

By randomizing some of the SOFM parameters we can produce different patterns on each run. In this article, we will use the 3 most important SOFM parameters:

- Learning rate

- Learning radius

- Learning rate for the neighbour neurons

Learning rate defines how far we push neurons during an update. If the learning rate is small, we won’t push a neuron too far from its initial position.

Learning radius defines how many neighbour neurons will be updated. The larger the radius, the more neighbours are updated in each iteration. If the learning radius is equal to zero, only one neuron is updated.

The last parameter controls the learning rate for the neighbour neurons. The larger the value, the bigger the update that the neighbour neurons get.

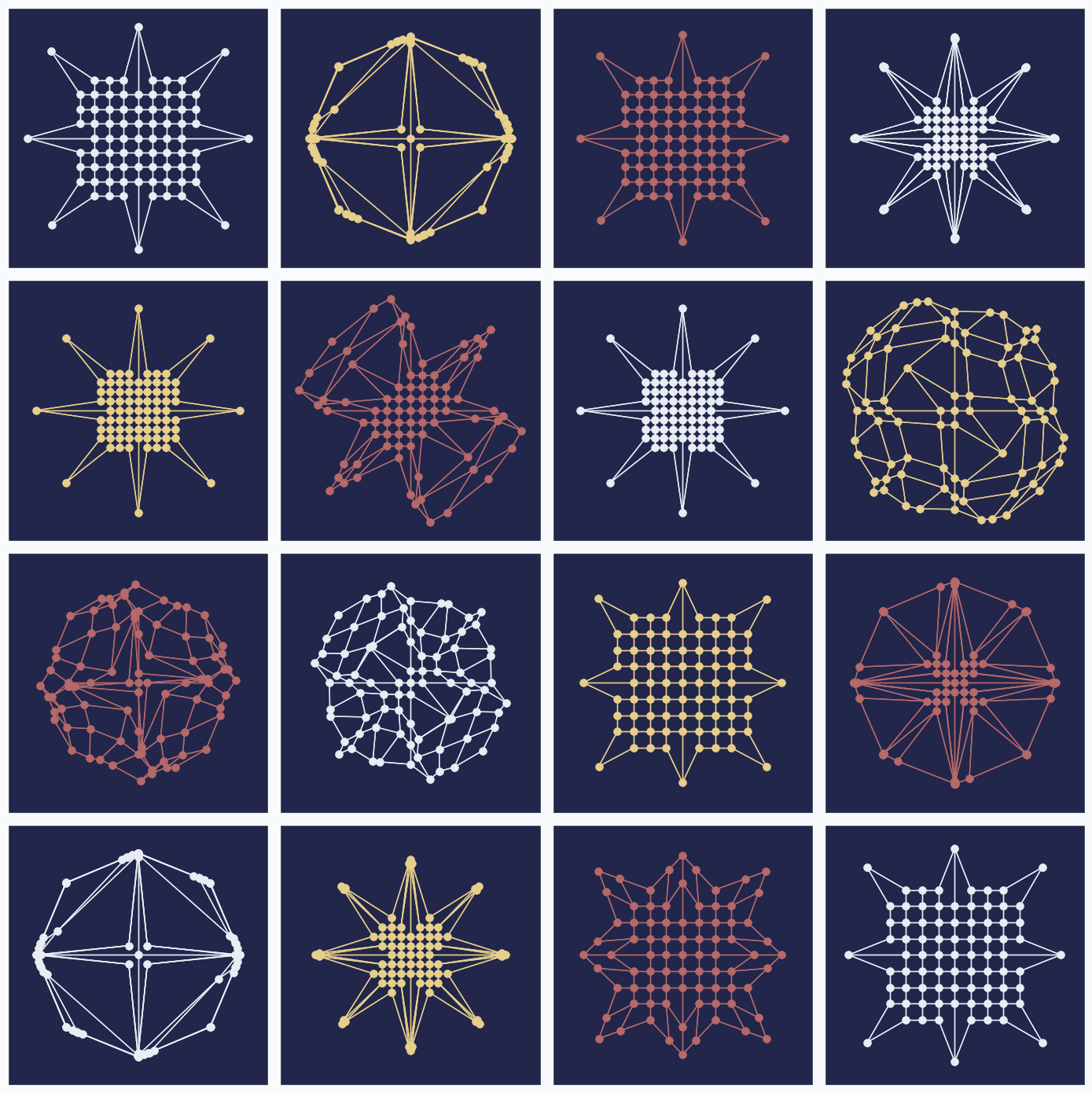

Generate interesting patterns

As in the previous example, we will use only two data points and rotate them by 45 degrees counterclockwise after each update. Each of the 3 SOFM parameters is randomly sampled from a uniform distribution. Here are 16 randomly generated patterns.

You can see that even small changes in some of the parameters can produce very different results. One of the patterns even looks like a bird.

Applications

It feels a bit strange to think about this approach in the context of practical applications, but there are some things that you can do with these patterns. For instance, it can be used to generate unique avatar images for new website users. By adding more rules and variables to the image-generating function, we can make the patterns even more diverse.

I also tried to play a game with these patterns in order to train my intuition. We use SOFM parameters to generate these images, but the reverse procedure is also possible: looking at a generated pattern, we can guess the parameters that were used to generate it. For instance, here is a simple example of a pattern that looks like a star.

We can see that only one dot moved in each direction, so we can say that learning radius was zero. Since updated neurons moved far away from the initial grid we can say that learning rate was large.

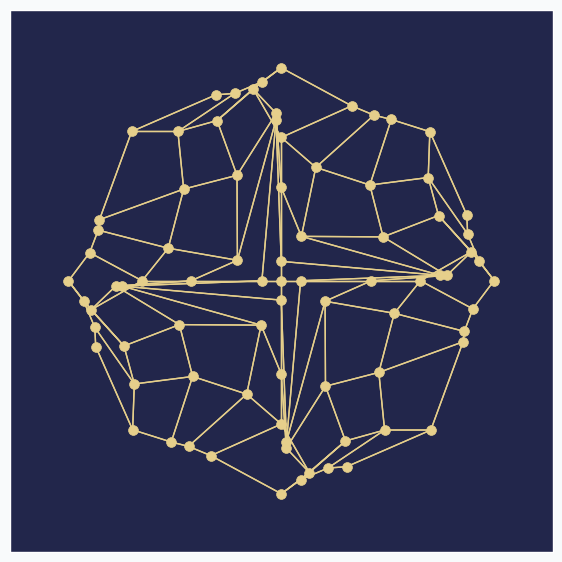

Here is another one, more complicated.

In this example it’s harder to say what happened, but we have at least one clue. In the center of the grid there are three dots, arranged horizontally, that didn’t move after all the updates. Knowing that there are nine dots in the row, we can conclude that the learning radius was 2 or 3. We are not sure which one, but if you notice that only 5 dots are left in their initial positions, then it’s very likely that the radius was 3, since more neurons were moved to new positions.

I hope you got the idea. It’s not always possible to guess the exact values, but each pattern can reveal some clues about the algorithm’s set up.

Further reading

If you want to learn more about SOFM, you can check the article that covers the basic ideas behind SOFM and some of the problems that can be solved with this algorithm.

Code

All the code that was used to generate the images in this article can be found in an iPython notebook on GitHub.

Self-Organizing Maps and Applications

Introduction

I was fascinated by the SOFM algorithm for a while, and that’s why I decided to write this article. The reason why I like it so much is that it’s a pretty simple and powerful approach that can be applied to solve different problems.

I believe that it’s important to understand the idea behind any algorithm even if you don’t know how to build it. For this reason, I won’t give a detailed explanation of how to build your own SOFM network. Instead, I will focus on the intuition behind this algorithm and the applications where you can use it. If you want to build it yourself, I recommend reading the Neural Network Design book.

Intuition behind SOFM

As with any neural network algorithm, the main building blocks of SOFM are neurons. Each neuron is typically connected to some other neurons, but the number of these connections is small: each neuron is connected to just a few other neurons that we call close neighbors. There are many ways to arrange these connections, but the most common one is a two-dimensional grid.

Each blue dot in the image is a neuron, and a line between two neurons means that they are connected. We call this arrangement of neurons a grid.

Each neuron in the grid has two properties: a position and connections to other neurons. We define the connections before we start training the network, and position is the only thing that changes during training. There are many ways to initialize the positions of the neurons, but the easiest one is to do it randomly. After this initialization the grid won’t look as nice as it does in the image above, but this gets resolved with more training iterations.

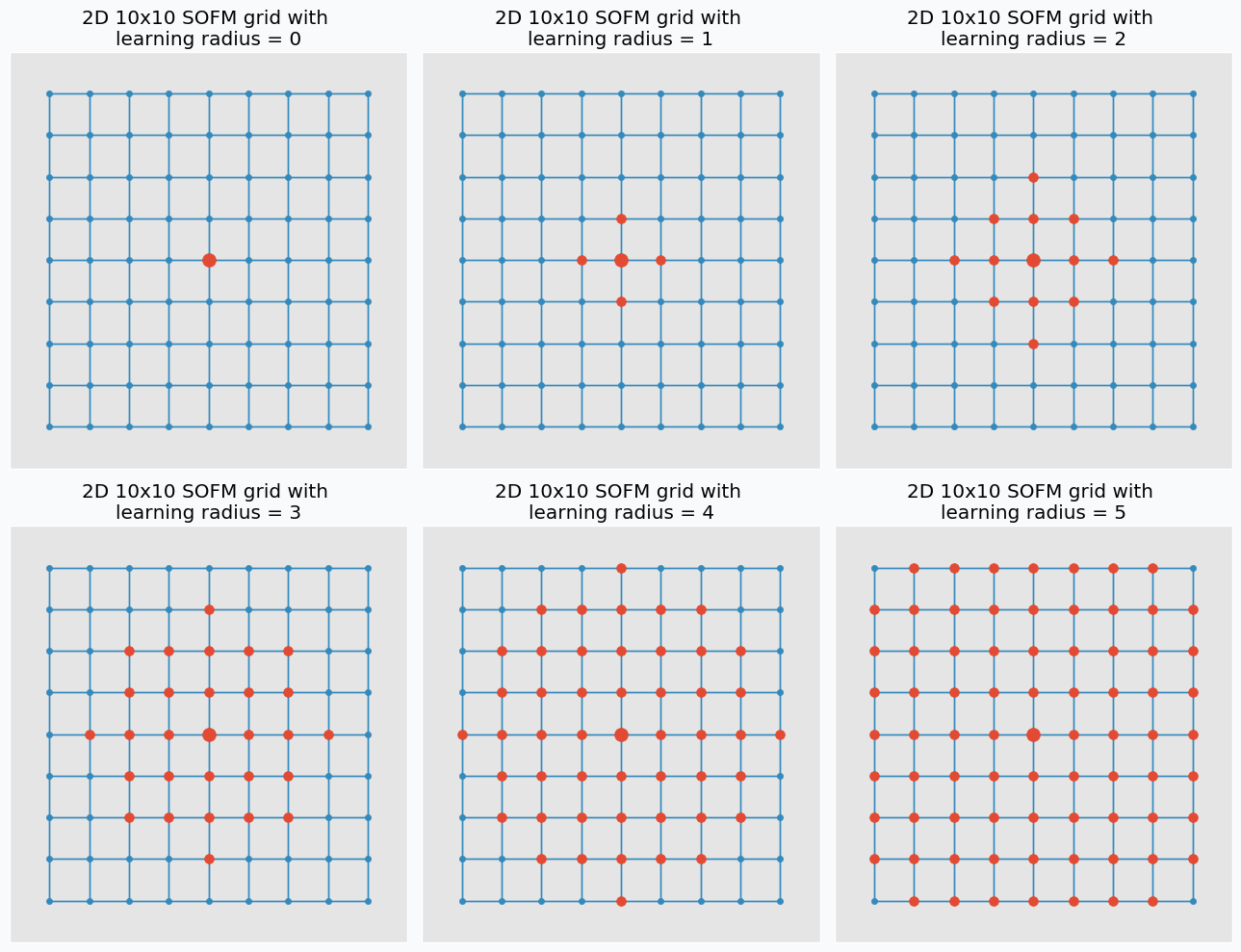

Let’s talk about training. In each training iteration we introduce a data point and find the neuron that is closest to this point. We call this neuron the winner. But before updating the position of this neuron, we find its neighbors. Note that these are not the same as the close neighbors. Before training we specify a special parameter known as the learning radius. It defines the radius within which we consider other neurons to be neighbors. In the image below you can see the same grid as before, with the neuron in the center marked as the winner. You can see in the pictures that a larger radius includes more neurons.

At the end of the iteration we update the positions of the winner and its neighbors. We change their positions by pushing them closer to the data point that we used to find the winner. We “push” the winner much closer to the data point than the neighbor neurons. In fact, the further away a neighbor is, the smaller the “push” it gets towards the data point. You can see how we update neurons in the image below for different learning radius values.

You probably noticed that the idea is very similar to the k-means algorithm, but what makes it really special is the existing relations between neurons.

It’s easy to compare this algorithm to the real world. Imagine that you are trying to put a large tablecloth on a large table. First you lay it down so that it partially covers the table. Then you go around and pull different sides of the tablecloth until the table is covered. But when you pull one side, other parts of the tablecloth start moving in the direction in which you pull, just like what happens during training in SOFM.

Applications

Surprisingly, this simple idea has a variety of applications. In this part of the article, I’ll cover a few of the most common ones.

Clustering

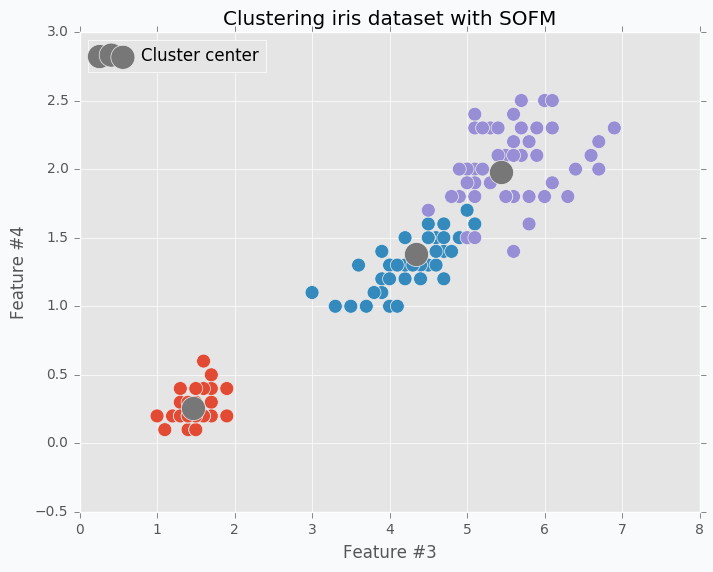

Clustering is probably the most trivial application of SOFM. In the case of clustering, we treat every neuron as the centre of a separate cluster. One of the problems is that during training, when we pull one neuron closer to one of the clusters, we are forced to pull its neighbors as well. To avoid this issue, we need to break the relations between neighbors, so that an update to one neuron has no influence on the others. If we set the learning radius to 0, the winner has no relations with other neurons, which is exactly what we need for clustering.

In the image below you can see two features from the iris dataset, together with three SOFM neurons colored in grey. As you can see, SOFM managed to find pretty good cluster centers.

$ python sofm_iris_clustering.py

Clustering is a useful application, but not a very special one. If you run the k-means algorithm on the same dataset that I used in this example, you should get roughly the same result. I don’t see any advantage of SOFM with a learning radius of 0 over k-means. I like to think about the SOFM clustering application more as debugging. When you are trying to find where your code breaks, you can disable some parts of it and see whether a specific function still breaks. With SOFM, we disable some parts in order to see how the rest behaves without them.

What would happen if we increase the number of clusters? Let’s increase it from 3 to 20 and run clustering on the same data.

The neurons just spread out all over the data, trying to cover it. Since we now have lots of clusters, each one covers a smaller portion of the data. We can call it micro-clustering.

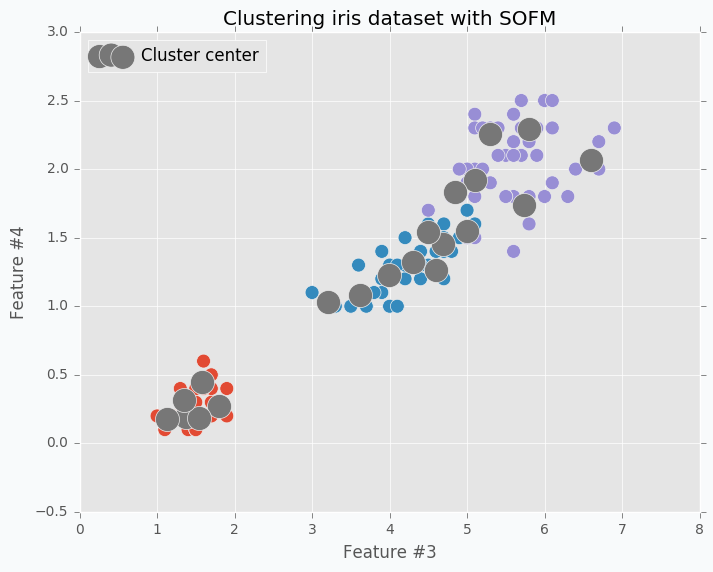

Space approximation

In the previous example, we tried to do a space approximation. Space approximation is similar to clustering, but here the goal is to find the minimum number of points that cover as much data as possible. Since it’s similar to clustering, we can use SOFM here as well. But as we saw in the previous example, the neurons weren’t using the space efficiently: some were very close to each other and some were further apart. The problem is that clusters don’t know about the existence of other clusters, so they behave independently. To get more cooperative behavior between clusters, we can enable the learning radius in SOFM. Let’s try a different example. I generated a two-dimensional dataset in the shape of a moon that we will try to approximate using SOFM. First, let’s try to do it without increasing the learning radius, applying the same micro-clustering technique as before.

As you can see, we have the same issue as with the iris dataset. On the left side there are a few cluster centers that are very close to each other, and on the right side they are further apart. Now, let’s set the learning radius to 2 and see what happens.

$ python sofm_moon_topology.py

You can see that the cluster centers are distributed more efficiently along the moon-shaped cluster. Even if we remove the data points from the plot, the cluster centers give us a good understanding of the shape of the original data.

You might ask, what is the use of this application? One thing you can do is use this approach to reduce the size of your data sample. The idea is that, since the feature map spreads out all over the space, you can generate a smaller dataset that keeps the useful properties of the main one. This is useful not only for reducing the training sample, but also for other applications. For instance, if you have lots of unlabelled data and labelling is expensive, you can use the same technique to find a smaller sub-sample of the main dataset and label only this subset instead of a random sample.

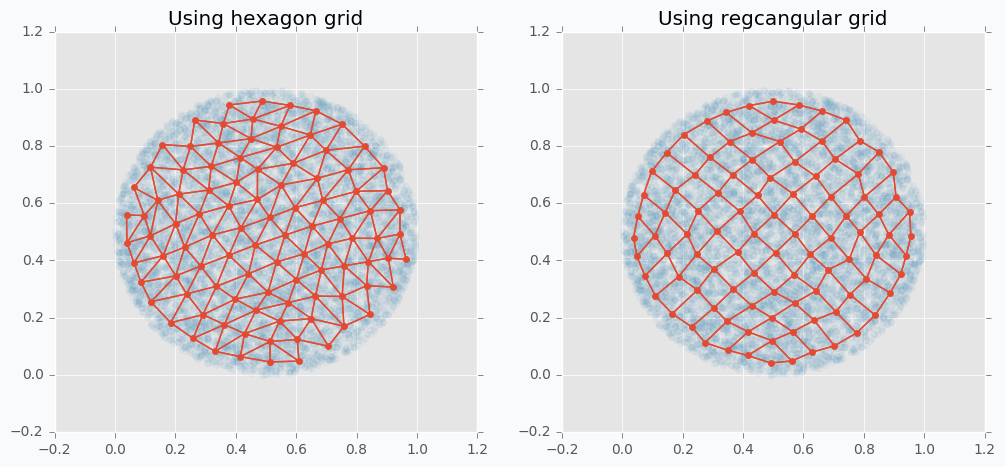

We can use grids with more than one dimension in SOFM in order to capture more complicated patterns. In the following example, you can see SOFM with a two-dimensional feature map that approximates roughly 8,000 data points using only 100 features.

$ python sofm_compare_grid_types.py

The same property of space approximation can be extended to the high-dimensional datasets and used for visualizations.

High-dimensional data visualization

We used SOFM with a two-dimensional feature map in order to capture the spatial properties of datasets with only two features. If we increase the number of dimensions to three, it is still possible to visualize the result, but in four dimensions it becomes a bit trickier.

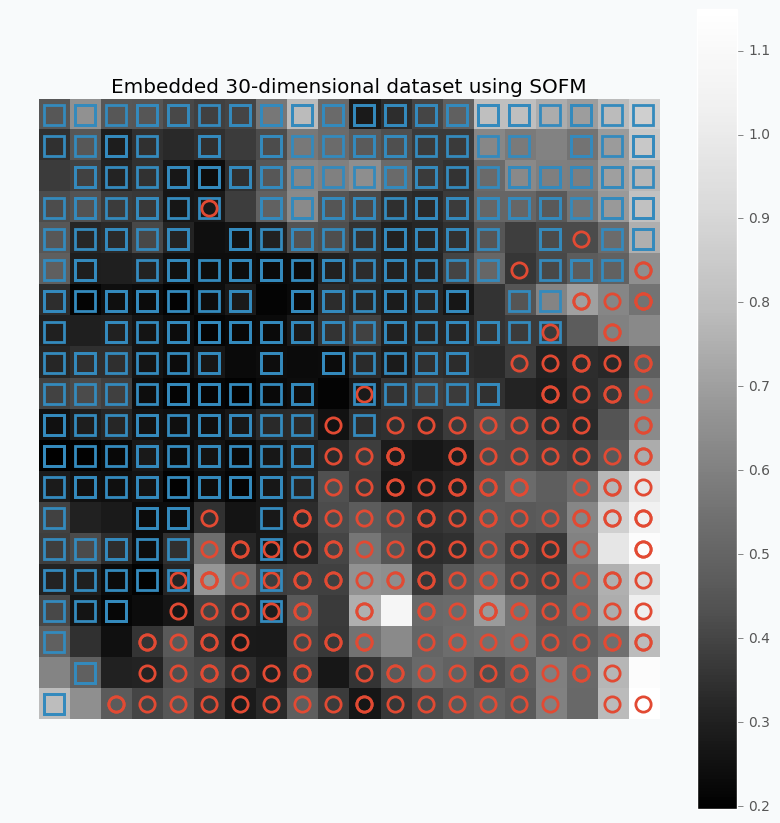

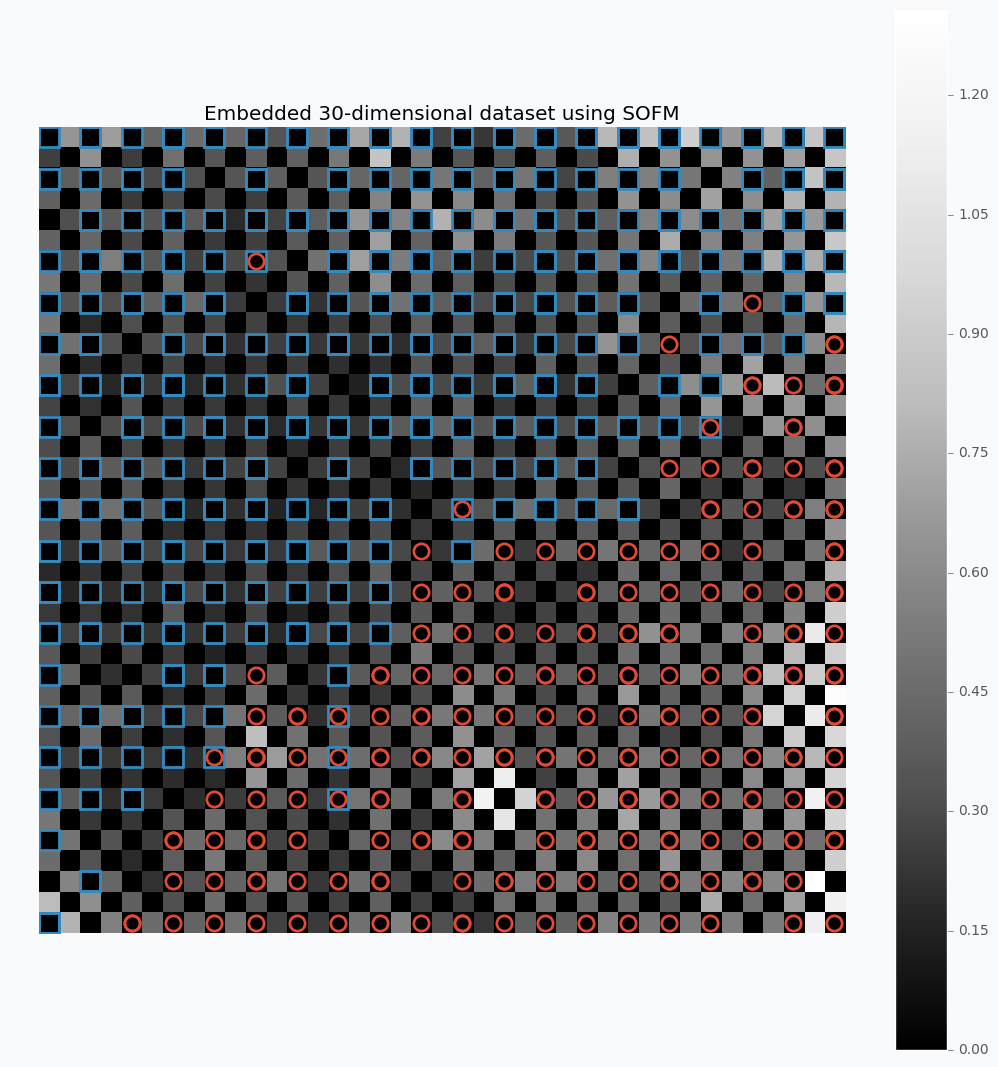

If we use a two-dimensional grid and train SOFM on high-dimensional data, we can encode the network as a heat map where each neuron is represented by the average distance to its neighbors.
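The heat map values can be computed directly from the trained weights. The sketch below is a minimal version under a few assumptions: `weights[r][c]` is the weight vector of the neuron at grid cell `(r, c)`, distances are Euclidean, and only the four directly connected cells count as neighbors. The function name is made up for this example.

```python
import math


def average_neighbour_distance(weights):
    """Return a grid of average distances from each neuron to its neighbours."""
    rows, cols = len(weights), len(weights[0])
    heatmap = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            distances = []
            # Only up, down, left and right cells are treated as neighbours.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    distances.append(
                        math.dist(weights[r][c], weights[nr][nc]))
            if distances:
                heatmap[r][c] = sum(distances) / len(distances)
    return heatmap


# Tiny 2x2 map with 2-dimensional weights; one neuron sits far from the rest.
weights = [[[0.0, 0.0], [3.0, 4.0]],
           [[0.0, 0.0], [0.0, 0.0]]]
heatmap = average_neighbour_distance(weights)
```

The isolated neuron in the top right corner gets the largest value, which is the kind of cell that would show up as a bright spot in the heat map.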

As an example, let’s take a look at the breast cancer dataset available in the scikit-learn library. This dataset has 30 features and two classes.

Let’s see what we get if we apply the described method to this 30-dimensional data.

$ python sofm_heatmap_visualization.py

For this example, I used SOFM with a 20x20 feature map, which basically means that we have 400 micro-clusters. Most of the micro-clusters contain either blue squares or red circles, and just a few of them contain both classes or none.

You can see how micro-clusters with blue squares tend to be close to each other, and the same is true for red circles. In fact, we can even draw a simple boundary that separates the two classes from each other. Along this boundary we can see some cases where a micro-cluster contains both red and blue classes, which means that in some places these samples sit very close together. In other cases, like in the bottom left corner, we can see parts that do not belong to any of the classes, which means that there is a gap between data points.

You can also notice that each cell in the heat map has a different color. From the colorbar, we can see that black encodes small numbers and white encodes large numbers. Each cell has a number associated with it that defines the average distance to neighboring clusters. White means that the cluster is far away from its neighbors. The group of red circles on the right side of the plot is white, which means that this group is far from the main cluster.

One problem is that color depends on the average distance which can be misleading in some cases. We can build a bit different visualization that will encode distance between two separate micro-clusters as a single value.

$ python sofm_heatmap_visualization.py --expanded-heatmap

Now between every feature and its neighbor there is an extra square. As in the previous example, each square encodes the distance between two neighboring features. We do not consider two features in the map to be neighbors if they are connected diagonally. That’s why all diagonal squares between two micro-clusters are colored black. Diagonals are a bit more difficult to encode, because each diagonal square involves two different distances. To visualize them, we can also take the average of these distances.

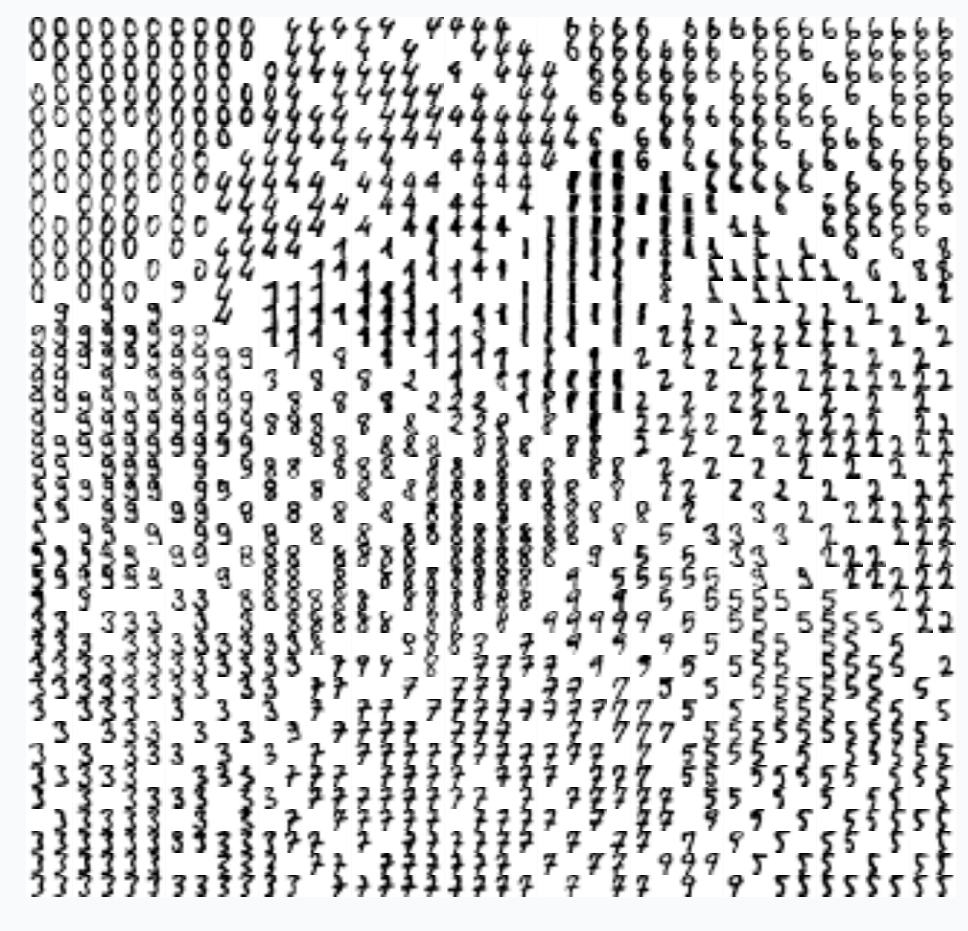

A more interesting way to make this type of visualization is to use images. In the previous case, we used markers to encode two different classes. With images, we can use them directly to represent each cluster. Let’s try to apply this idea to a small dataset with images of digits from 0 to 9.

$ python sofm_digits.py

Visualize pre-trained VGG19 network

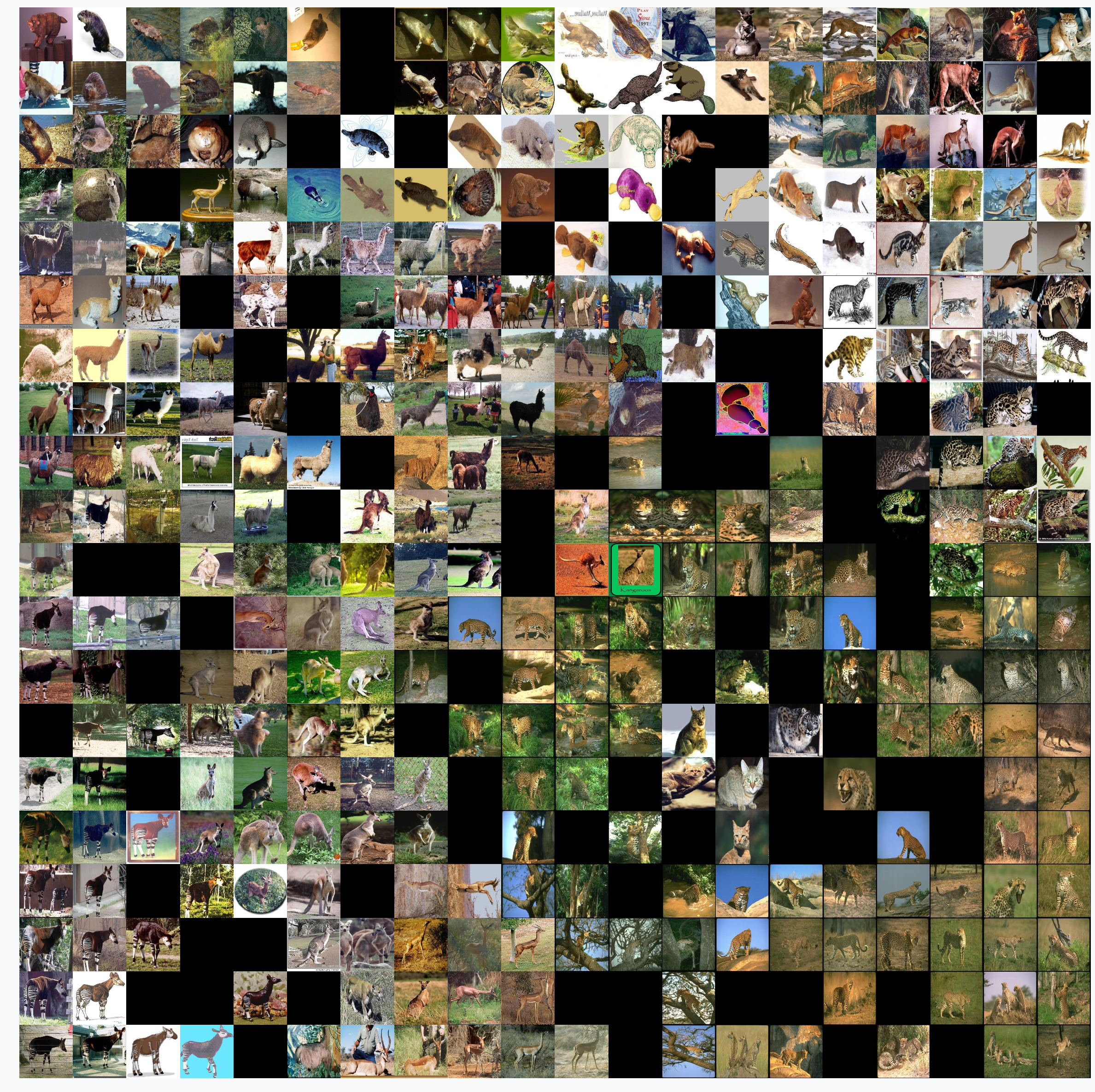

Using the same techniques, we can look inside deep neural networks. In this section, I will look at the VGG19 network pre-trained on ImageNet data. Only this time, I decided to make it a bit more challenging. Instead of using data from ImageNet, I picked 9 classes of different animal species from the Caltech 101 dataset. The interesting part is that a few of these species are not in ImageNet.

The goal of this visualization is not only to see how the VGG19 network separates different classes, but also to see whether it is able to extract distinctive features of new classes that it hasn’t seen before. This information can be useful for transfer learning, because from the visualization we should be able to see whether the network can separate an unknown class from the others. If it can, there is no need to re-train the layers below the one that we are visualizing.

From the Caltech 101 dataset I picked the following classes:

There are a few classes that aren’t in ImageNet, namely Okapi, Wild cat and Platypus.

The data was prepared in the same way as it was for VGG19 during training on ImageNet data. I first removed the final layer from the network, so the output for each image is a 4096-dimensional vector. Because of the high dimensionality, I used cosine similarity to find the closest SOFM neurons (instead of the Euclidean distance that we used in all previous examples).
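To make the change of metric concrete, here is a small sketch of picking the winner by cosine similarity instead of Euclidean distance. The function names and the tiny 2-dimensional vectors are made up for the illustration (the real vectors have 4096 dimensions), and the code assumes that none of the vectors is all zeros.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_neuron(neurons, sample):
    """Index of the neuron with the highest cosine similarity to the sample."""
    return max(range(len(neurons)),
               key=lambda i: cosine_similarity(neurons[i], sample))


neurons = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
winner_small = closest_neuron(neurons, [10.0, 9.0])
winner_large = closest_neuron(neurons, [100.0, 90.0])
```

Both samples point in almost the same direction as the third neuron, so it wins in both cases: cosine similarity compares directions and ignores the length of the vectors.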

Even without getting into the details, it’s easy to see that SOFM produces a pretty meaningful visualization. Similar species are close to each other in the visualization, which means that the main properties were captured correctly.

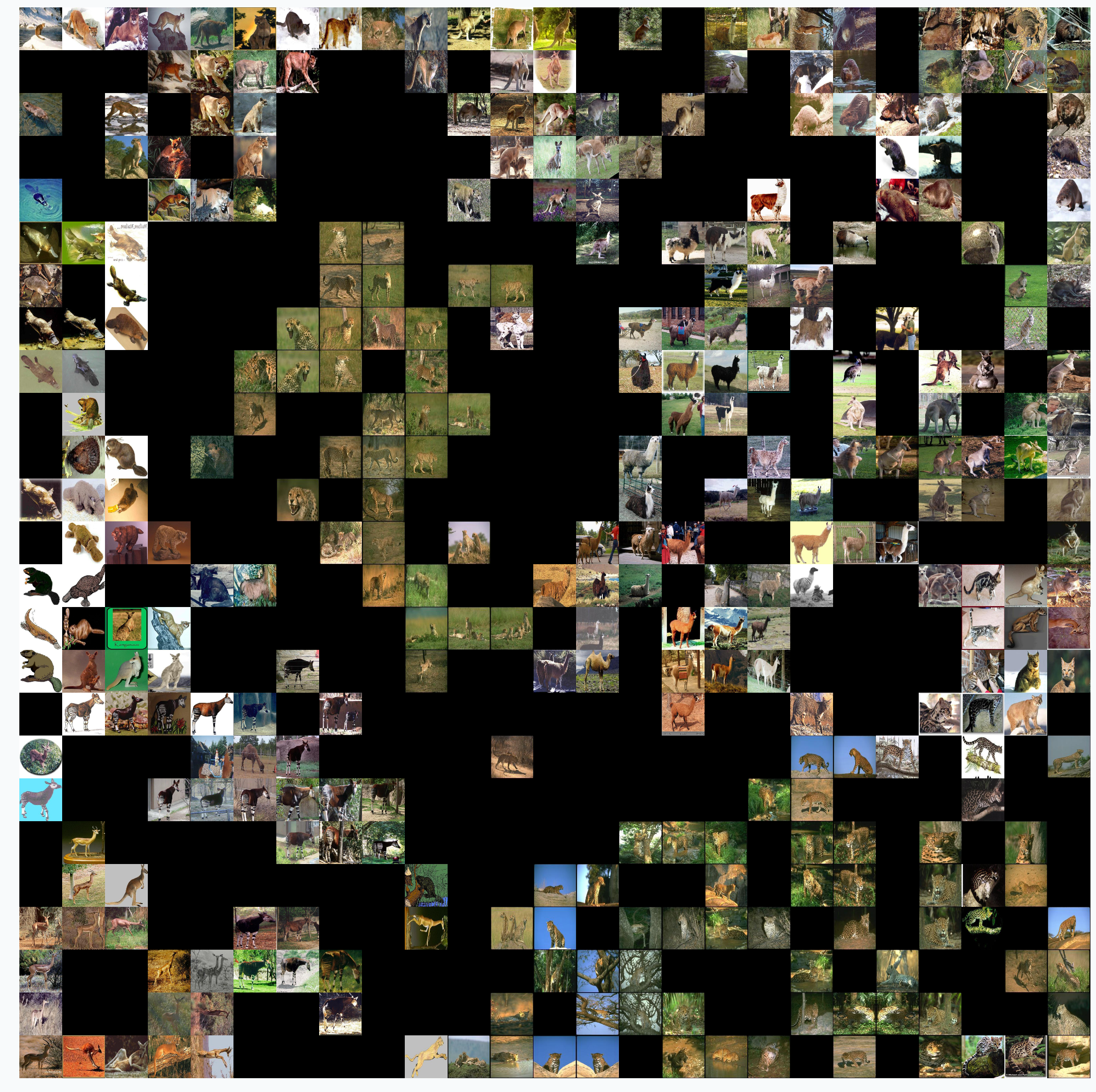

We can also visualize the output of the last layer. We only need to remove the final Softmax layer from the network in order to get the raw activation values. Using these values, we can visualize our data as well.

SOFM managed to identify the high-dimensional structure pretty well. There are many interesting things that we can learn from this image. For instance, beaver and platypus share similar features. Since platypus wasn’t a part of the ImageNet dataset, it is a reasonable mistake for the network to mix up these species.

You probably noticed that there are many black squares in the image. Each square represents a gap between two micro-clusters. You can see how images of separate species are separated from other species with these gaps.

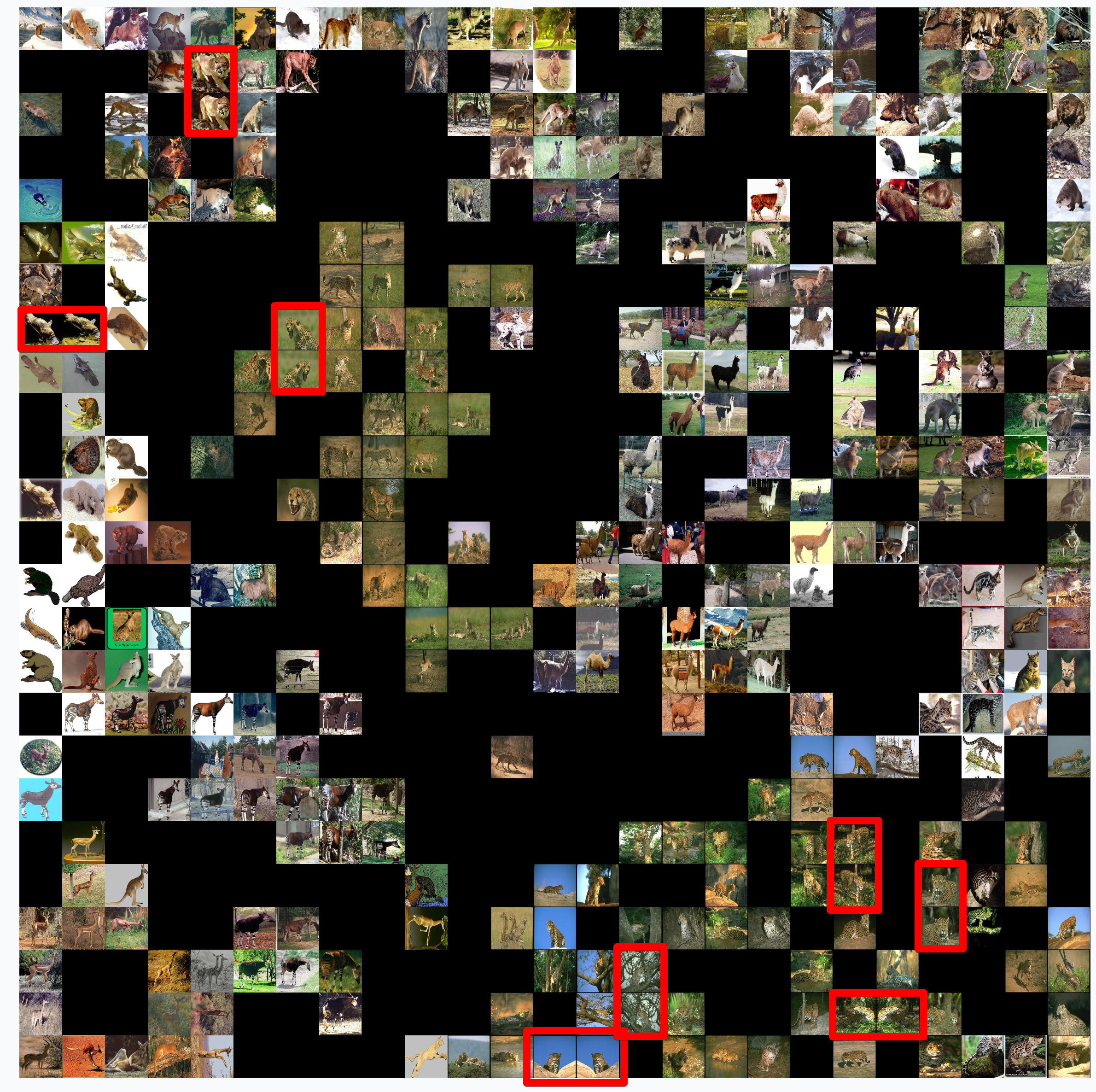

You can also see that the network learned to classify rotated and scaled images very similarly, which tells us that it is robust against small transformations applied to the image. In the image below, we can see a few examples.

There are also some things that reveal problems with the VGG19 network. For instance, look at the image of a llama that is really close to the cheetah images.

This image looks out of place. We can check the top 5 classes based on the probability that the network assigns to this image.

llama : 31.18%

cheetah, chetah, Acinonyx jubatus : 22.62%

tiger, Panthera tigris : 8.20%

lynx, catamount : 7.34%

snow leopard, ounce, Panthera uncia : 5.91%

The prediction is correct, but look at the second choice. The probability that it might be a cheetah is also pretty high. Even though cheetahs and llamas are not very similar to each other, the network still thinks that it could be a cheetah. The most obvious explanation of this phenomenon is that the llama in the image is covered with spots all over its body, which is a typical feature of a cheetah. This example shows how easily we can fool the network.

Summary

In the article, I mentioned a few applications where SOFM can be used, but it’s not the full list. It can also be used for other applications like robotics or even for creating some beautiful pictures. It is fascinating how such a simple set of rules can be applied to solve very different problems.

Despite all the positive things that can be said about SOFM, there are some problems that you may encounter.

- There are many hyperparameters, and selecting the right set of parameters can be tricky.

- SOFM doesn’t cover the borders of the data space, which means that the area, volume or hypervolume of the data will be smaller than it is in reality. You can see it in the picture where we approximate circles.

It also means that if you need to capture information about outliers in your data, SOFM will probably miss it.

- Not every space can be approximated well with SOFM. There can be cases where SOFM fits the data poorly, which is sometimes difficult to see.

Code

An iPython notebook with code that explores VGG19 using SOFM is available on GitHub. NeuPy has Python scripts that can help you start working with SOFM or show you how to use SOFM for different applications.

- Simple SOFM example

- Clustering iris dataset using SOFM

- Learning half-circle topology with SOFM

- Compare feature grid types for SOFM

- Compare weight initialization methods for SOFM

- Visualize digit images in 2D space with SOFM

- Embedding 30-dimensional dataset into 2D and building heatmap visualization for SOFM

Hyperparameter optimization for Neural Networks

Introduction

Sometimes it can be difficult to choose a correct architecture for Neural Networks. Usually, this process requires a lot of experience because networks include many parameters. Let’s check some of the most important parameters that we can optimize for the neural network:

- Number of layers

- Different parameters for each layer (number of hidden units, filter size for convolutional layer and so on)

- Type of activation functions

- Parameter initialization method

- Learning rate

- Loss function

Even though the list of parameters is not even close to being complete, it’s still impressive how many parameters influence a network’s performance.

Hyperparameter optimization

In this article, I would like to show a few different hyperparameter selection methods.

- Grid Search

- Random Search

- Hand-tuning

- Gaussian Process with Expected Improvement

- Tree-structured Parzen Estimators (TPE)

Grid Search

The simplest algorithm that you can use for hyperparameter optimization is Grid Search. The idea is simple and straightforward. You just need to define a set of parameter values, train the model for all possible parameter combinations and select the best one. This method is a good choice only when the model can be trained quickly, which is not the case for typical neural networks.

Imagine that we need to optimize 5 parameters. Let’s assume, for simplicity, that we want to try 10 different values for each parameter. Therefore, we need to make 100,000 (\(10 ^ 5\)) evaluations. Assuming that the network takes 10 minutes to train on average, hyperparameter tuning would take almost 2 years. Seems crazy, right? Typically, a network takes much longer to train and we need to tune more hyperparameters, which means that it can take forever to run grid search for a typical neural network. A better solution is random search.
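The estimate is easy to check with a few lines of Python. The parameter names and values in the small grid at the end are hypothetical and only show how quickly the number of combinations grows.

```python
from itertools import product

values_per_parameter = 10
n_parameters = 5
minutes_per_run = 10

# Every combination of values has to be trained once.
n_evaluations = values_per_parameter ** n_parameters
total_days = n_evaluations * minutes_per_run / (60 * 24)
total_years = total_days / 365

# A tiny hypothetical grid: 2 learning rates x 2 layer sizes x 2 activations.
small_grid = list(product([0.1, 0.01], [10, 100], ["relu", "tanh"]))

print(n_evaluations, round(total_days), round(total_years, 2))
```

That is roughly 694 days of training, or about 1.9 years, for a network that needs only 10 minutes per run.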

Random Search

The idea is similar to Grid Search, but instead of trying all possible combinations we use only a randomly selected subset of them. Instead of checking 100,000 parameter sets, we can check only 1,000. Now it takes about a week to run hyperparameter optimization instead of 2 years.
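A minimal sketch of random search could look like this. The `toy_objective` function is a hypothetical stand-in for "train the network and return the validation score", and the parameter names and ranges are assumptions made for the example.

```python
import random


def random_search(objective, space, n_trials, seed=0):
    """Evaluate `n_trials` uniformly sampled parameter sets, keep the best."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high)
                  for name, (low, high) in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params):
    # Hypothetical score with its maximum at learning_rate=0.3, momentum=0.7.
    return (-(params["learning_rate"] - 0.3) ** 2
            - (params["momentum"] - 0.7) ** 2)


space = {"learning_rate": (0.0, 1.0), "momentum": (0.0, 1.0)}
best_params, best_score = random_search(toy_objective, space, n_trials=200)

# Budget from the text: 1,000 runs of 10 minutes each, expressed in days.
days = 1_000 * 10 / (60 * 24)
```

With the numbers from the text, 1,000 runs of 10 minutes each take just under 7 days.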

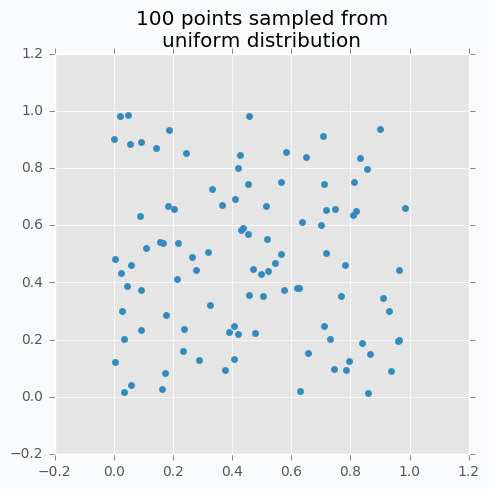

Let’s sample 100 two-dimensional data points from a uniform distribution.

If there are not enough data points, random sampling doesn’t fully cover the parameter space. This can be seen in the figure above, where some regions don’t have any data points. In addition, it samples some points very close to each other, which is redundant for our purposes. We can solve this problem with low-discrepancy sequences (also called quasi-random sequences).

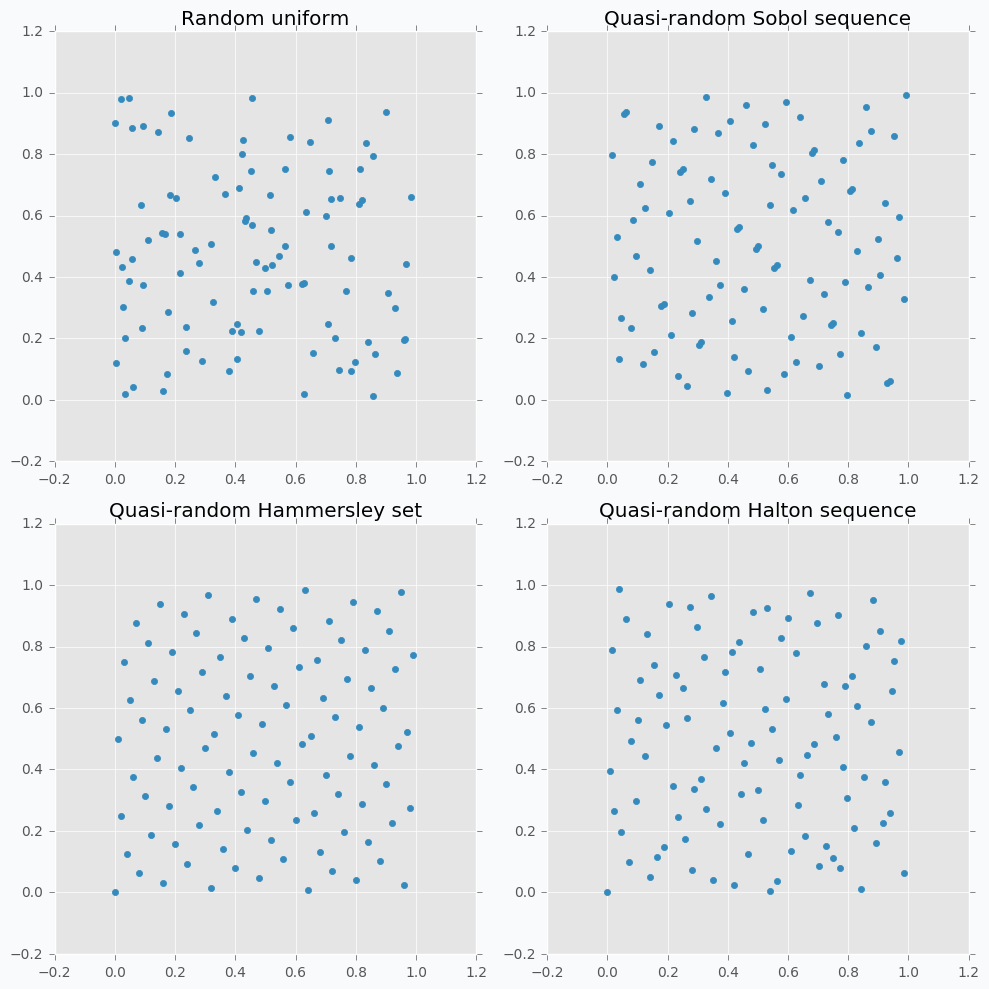

There are many different techniques for quasi-random sequences:

- Sobol sequence

- Hammersley set

- Halton sequence

- Poisson disk sampling

Let’s compare some of the mentioned methods with the randomly sampled data points from before.

As we can see, the sampled points now spread out through the parameter space more uniformly. One disadvantage of these methods is that not all of them give good results in higher dimensions. For instance, the Halton sequence and the Hammersley set do not work well for more than 10 dimensions [7].
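To give a feeling for how such a sequence is built, here is a small pure-Python sketch of the Halton sequence. It is the textbook construction based on the radical inverse in a prime base, not necessarily the code behind the plots above, and the function names are chosen just for this example.

```python
def van_der_corput(index, base):
    """Radical inverse of `index` in the given base, a number in [0, 1)."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result


def halton(n_points, bases=(2, 3)):
    """First `n_points` of the Halton sequence, one prime base per dimension."""
    return [tuple(van_der_corput(i, base) for base in bases)
            for i in range(1, n_points + 1)]


points = halton(100)
```

In base 2 the first coordinates are 0.5, 0.25, 0.75, 0.125 and so on: each new point falls into the largest gap left by the previous ones, which is why the points cover the space so evenly.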

Even though we improved the hyperparameter optimization algorithm, it is still not suitable for large neural networks.

But before we move on to more complicated methods I want to focus on parameter hand-tuning.

Hand-tuning

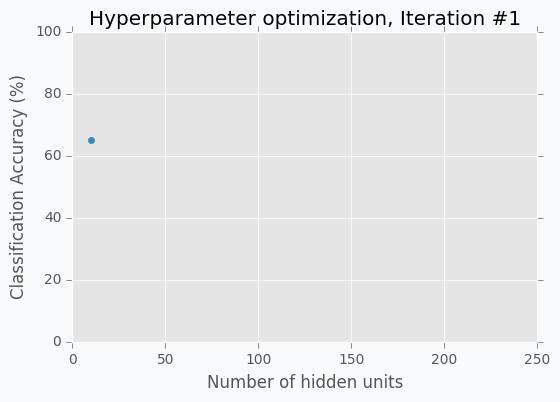

Let’s start with an example. Imagine that we want to select the best number of units in the hidden layer (we set up just one hyperparameter for simplicity). The simplest thing is to try different values and select the best one. Let’s say we set up 10 units for the hidden layer and train the network. After the training, we check the accuracy for the validation dataset and it turns out that we classified 65% of the samples correctly.

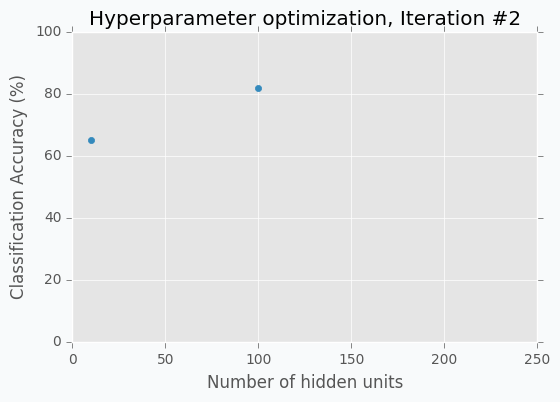

The accuracy is low, so it’s intuitive to think that we need more units in the hidden layer. Let’s increase the number of units and check the improvement. But by how much should we increase the number of units? Will small changes have a significant effect on the prediction accuracy? Would it be a good step to set the number of hidden units to 12? Probably not. So let’s go further and explore parameters from the next order of magnitude. We can set the number of hidden units to 100.

For the 100 hidden units, we got prediction accuracy equal to 82% which is a great improvement compared to 65%. Two points in the figure above show us that by increasing number of hidden units we increase the accuracy. We can proceed using the same strategy and train network with 200 hidden units.

After the third iteration, our prediction accuracy is 84%. We’ve increased the number of units by a factor of two and got only 2% of improvement.

We can keep going, but I think this example makes it clear that a human can select parameters better than the Grid Search or Random Search algorithms. The main reason is that we are able to learn from our previous mistakes. After each iteration, we memorize and analyze our previous results. This information gives us a much better basis for selecting the next set of parameters. And even more than that: the more you work with neural networks, the better intuition you develop for what to use and when.

Nevertheless, let’s get back to our optimization problem. How can we automate the process described above? One way of doing this is to apply Bayesian Optimization.

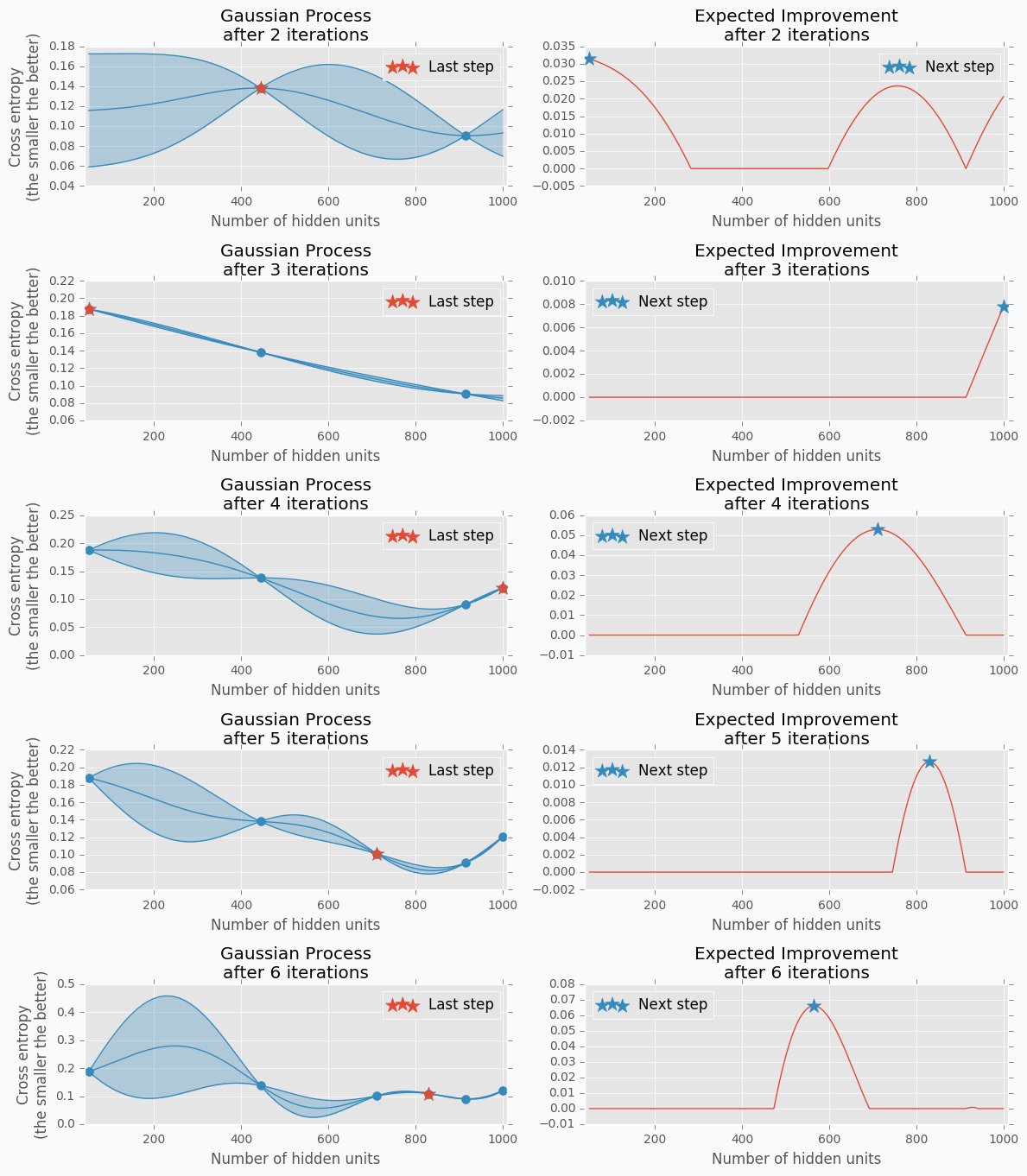

Bayesian Optimization

Bayesian optimization is a derivative-free optimization method. There are a few different algorithms for this type of optimization, but I was specifically interested in a Gaussian Process with an Acquisition Function. For some people it may resemble the method that we described above in the Hand-tuning section. The Gaussian Process uses a set of previously evaluated parameters and the resulting accuracies to make assumptions about unobserved parameters. The Acquisition Function uses this information to suggest the next set of parameters.

Gaussian Process

The idea behind a Gaussian Process is that for every input \(x\) we have an output \(y = f(x)\), where \(f\) is a stochastic function. This function samples its output from a Gaussian distribution. In other words, each input \(x\) has an associated Gaussian distribution, which means that for each input \(x\) the Gaussian Process defines a mean \(\mu\) and a standard deviation \(\sigma\).

A Gaussian Process is a generalization of the Multivariate Gaussian Distribution. A Multivariate Gaussian Distribution is defined by a mean vector and a covariance matrix, while a Gaussian Process is defined by a mean function and a covariance function. Basically, a function can be thought of as an infinitely long vector. We can also say that a Multivariate Gaussian Distribution is a Gaussian Process for functions with a finite number of possible inputs.

I always like to have some picture that shows me a visual description of an algorithm. I found one such visualization of the Gaussian Process in the Introduction to Gaussian Process slides [3].

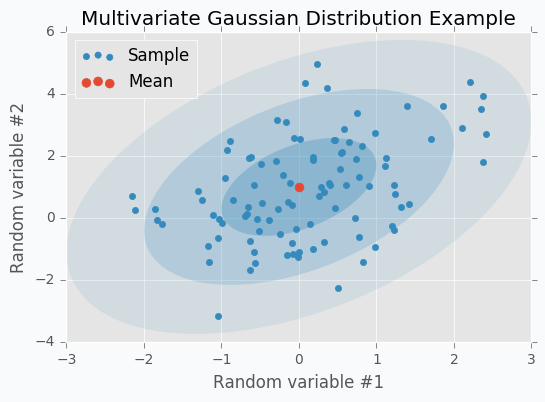

Let’s check some Multivariate Gaussian Distribution defined by mean vector \(\mu\)

and covariance matrix \(\Sigma\)

We can sample 100 points from this distribution and make a scatter plot.
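Sampling from a two-dimensional Gaussian needs nothing more than independent standard normal numbers and the Cholesky factor of the covariance matrix. The sketch below uses a hypothetical zero mean and a covariance with negative correlation; these values are placeholders for the illustration and not necessarily the ones behind the plots.

```python
import math
import random


def sample_bivariate_gaussian(mean, cov, n_samples, seed=0):
    """Draw samples from a 2-D Gaussian using a Cholesky factor of `cov`."""
    rng = random.Random(seed)
    # Lower-triangular L with L @ L.T == cov, written out for the 2x2 case.
    l11 = math.sqrt(cov[0][0])
    l21 = cov[1][0] / l11
    l22 = math.sqrt(cov[1][1] - l21 ** 2)
    samples = []
    for _ in range(n_samples):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        samples.append((mean[0] + l11 * z1,
                        mean[1] + l21 * z1 + l22 * z2))
    return samples


# Hypothetical parameters: zero mean, unit variances, correlation of -0.8.
mean = [0.0, 0.0]
cov = [[1.0, -0.8],
       [-0.8, 1.0]]
samples = sample_bivariate_gaussian(mean, cov, n_samples=100)
```

Each sample is a pair of values, one per random variable, which is exactly what a scatter plot or a Parallel Coordinates plot draws.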

Another way to visualize these samples might be Parallel Coordinates.

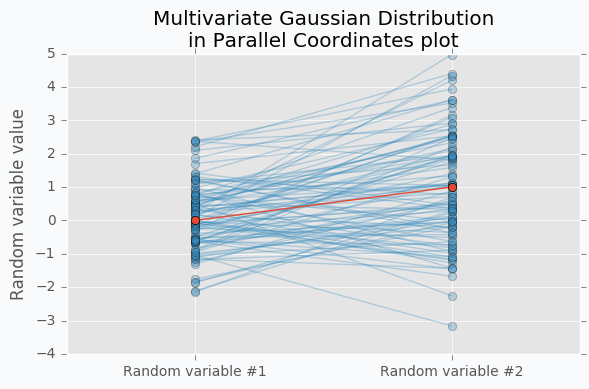

You should understand that the lines connecting the points are just imaginary relations between the coordinates. There is nothing in between Random variable #1 and Random variable #2.

An interesting thing happens when we increase the number of samples.

Now we can see that the lines form a smooth shape. This shape reflects the correlation between the two random variables. If it’s very narrow in the middle, then there is a negative correlation between the two random variables.

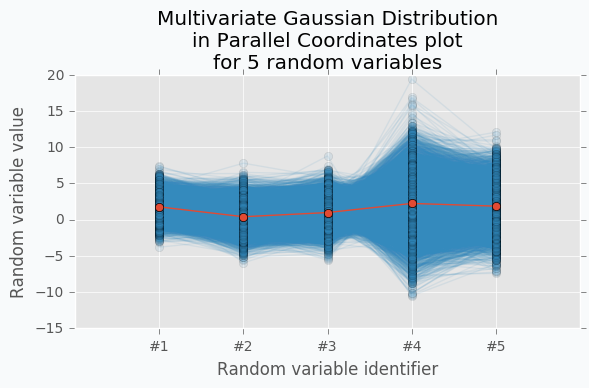

With a scatter plot we are limited in the number of dimensions that we can visualize, but with Parallel Coordinates we can add more dimensions. Let’s define a new Multivariate Gaussian Distribution using 5 random variables.

With more variables, it looks more like a function. We can increase the number of dimensions and still be able to visualize the Multivariate Gaussian Distribution. The more dimensions we add, the more it looks like a set of functions sampled from a Gaussian Process. But in the case of a Gaussian Process, the number of dimensions is infinite.

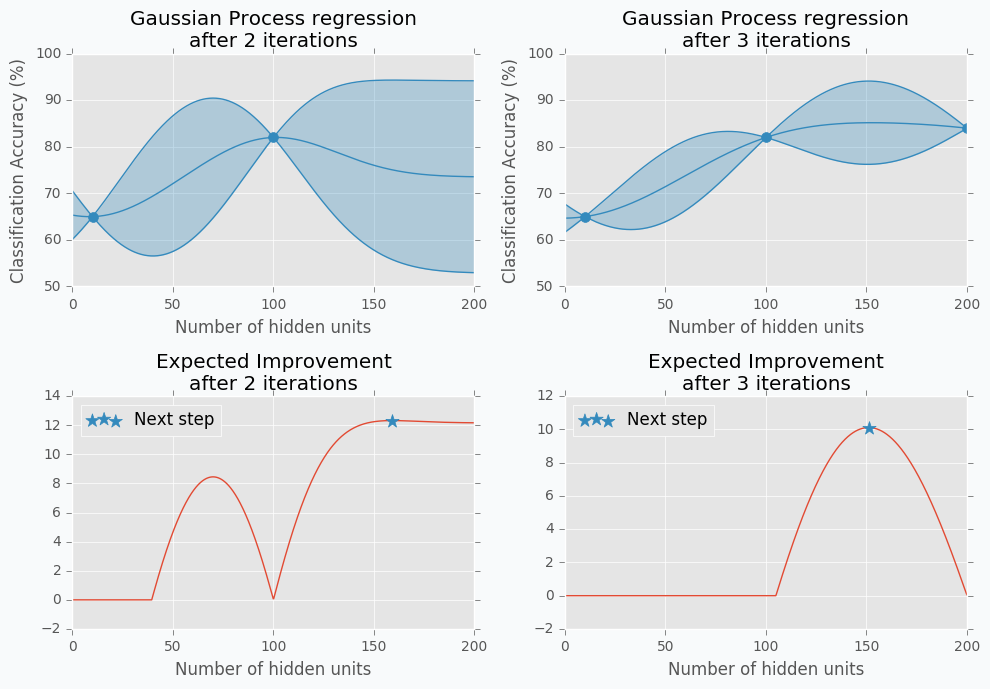

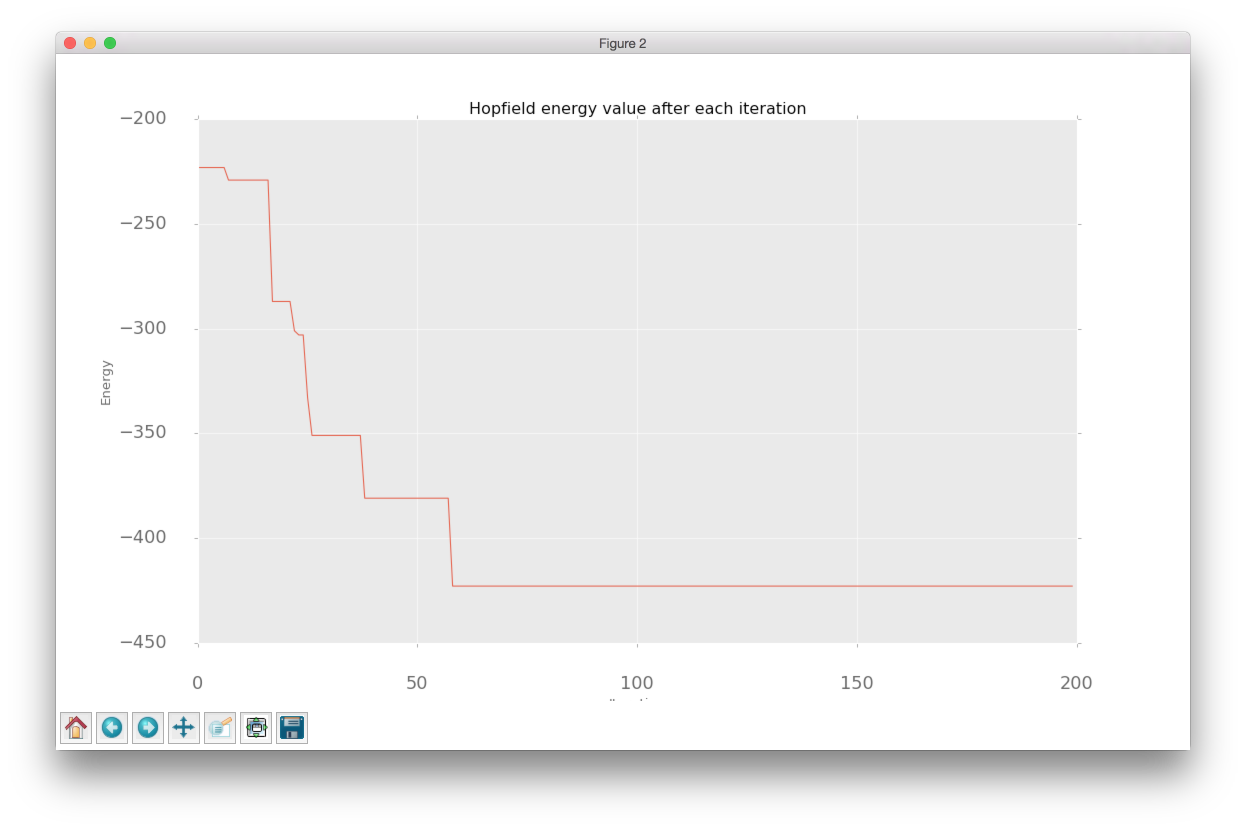

Let’s take the data from the Hand-tuning section (the one where we got 65% accuracy with 10 hidden units). Using this data, we can train a Gaussian Process and predict the mean and standard deviation for each point \(x\).

The blue region defines the 95% confidence interval for each point \(x\). It’s easy to see that the further we go from the observed samples, the wider the confidence interval becomes, which is a logical conclusion. The opposite is true as well. This is very similar to the logic that a person uses to select the next set of parameters.

From the plot, it looks like the observed data points don’t have any variance. In fact, the variance is not zero, it’s just really tiny. That’s because our Gaussian Process configuration expects the observations to come from a deterministic function, which is not true for most neural networks. To fix this, we can change the Gaussian Process parameter that defines the amount of noise in the observed variables. This trick gives us not only a prediction that is less certain, but also a mean curve that no longer has to pass exactly through the observed data points.
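The effect of the noise parameter can be reproduced with a tiny hand-written Gaussian Process. This is only a sketch under several assumptions that the article does not specify: an RBF kernel, a constant prior mean equal to the average observed accuracy, and hand-picked values for the length scale and signal variance.

```python
import math


def rbf(a, b, length_scale, signal_var):
    return signal_var * math.exp(-0.5 * ((a - b) / length_scale) ** 2)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def gp_predict(xs, ys, x_new, length_scale=50.0, signal_var=0.01, noise=1e-10):
    """Posterior mean and standard deviation of the GP at `x_new`."""
    prior_mean = sum(ys) / len(ys)  # assumption: constant prior mean
    # Kernel matrix of the observations, with noise variance on the diagonal.
    K = [[rbf(a, b, length_scale, signal_var) + (noise if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    k_star = [rbf(a, x_new, length_scale, signal_var) for a in xs]
    alpha = solve(K, [y - prior_mean for y in ys])
    mean = prior_mean + sum(k * a for k, a in zip(k_star, alpha))
    var = signal_var - sum(k * w for k, w in zip(k_star, solve(K, k_star)))
    return mean, math.sqrt(max(var, 0.0))


# Observations from the Hand-tuning section: hidden units and accuracy.
units, accuracy = [10.0, 100.0, 200.0], [0.65, 0.82, 0.84]
exact_mean, exact_std = gp_predict(units, accuracy, 10.0)
noisy_mean, noisy_std = gp_predict(units, accuracy, 10.0, noise=1e-3)
far_mean, far_std = gp_predict(units, accuracy, 1000.0)
```

With almost no noise, the prediction at 10 hidden units reproduces the observed 65% with nearly zero uncertainty. With a larger noise value the mean moves away from the observation and the uncertainty grows, and far from any observation the prediction falls back to the prior.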

Acquisition Function

The Acquisition Function defines the set of parameters for our next step. There are many different functions [1] that can help us calculate the best value for the next step. One of the most common is Expected Improvement. There are two ways to compute it. If we are trying to find a minimum, we can use this formula.

\[EI_{min}(x) = \max(0, y_{min} - y_{lowest\ expected})\]

where \(y_{min}\) is the minimum observed value \(y\) and \(y_{lowest\ expected}\) is the lowest possible value from the confidence interval associated with each possible value \(x\).

In our case, we are trying to find the maximum value. With a small modification, we can change the last formula so that it identifies the Expected Improvement for the maximum value.

\[EI_{max}(x) = \max(0, y_{highest\ expected} - y_{max})\]

where \(y_{max}\) is the maximum observed value and \(y_{highest\ expected}\) is the highest possible value from the confidence interval associated with each possible value \(x\).

Here is the output of the Expected Improvement function for each point \(x\).
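In code, this version of Expected Improvement is a one-liner. The sketch assumes that the "highest possible value from the confidence interval" is the upper bound of the 95% interval (the mean plus 1.96 standard deviations) and that the improvement is the gap between this bound and the best observed value, clipped at zero. The function name and the numbers are made up for the example.

```python
def expected_improvement_max(means, stds, y_max, z=1.96):
    """Clipped gap between the upper confidence bound and the best score."""
    return [max(0.0, (mean + z * std) - y_max)
            for mean, std in zip(means, stds)]


# Hypothetical GP predictions for three candidate values of x.
means = [0.80, 0.70, 0.84]
stds = [0.05, 0.00, 0.01]
improvement = expected_improvement_max(means, stds, y_max=0.84)
next_index = improvement.index(max(improvement))
```

The first candidate has a lower predicted mean than the best observation, but its wide confidence interval still makes it the most promising point to try next.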

Disadvantages of GP with EI

There are a few disadvantages related to the Gaussian Process with Expected Improvement.

- It doesn’t work well for categorical variables. In the case of neural networks, the type of activation function is one example.

- GP with EI selects a new set of parameters based on the best observation. Neural network training usually involves randomization (like weight initialization and dropout), which influences the final score. Running a neural network with the same parameters can lead to different scores, which means that our best score can be just a lucky output for a specific set of parameters.

- It can be difficult to select the right hyperparameters for the Gaussian Process. A Gaussian Process has lots of different kernel types. In addition, you can construct more complicated kernels using simple kernels as building blocks.

- It gets slower as the number of hyperparameters increases. That’s an issue when you deal with a huge number of parameters.

Tree-structured Parzen Estimators (TPE)

Overview

Tree-structured Parzen Estimators (TPE) fix the disadvantages of the Gaussian Process. In each iteration, TPE collects a new observation, and at the end of the iteration the algorithm decides which set of parameters it should try next. The main idea is similar, but the algorithm is completely different.

At the very beginning, we need to define a prior distribution for our hyperparameters. By default, they can all be uniformly distributed, but it’s possible to associate any hyperparameter with some random unimodal distribution.

For the first few iterations, we need to warm up the TPE algorithm. This means that we need to collect some data before we can apply TPE. The best and simplest way to do this is to perform a few iterations of Random Search. The number of Random Search iterations is a parameter of the TPE algorithm defined by the user.

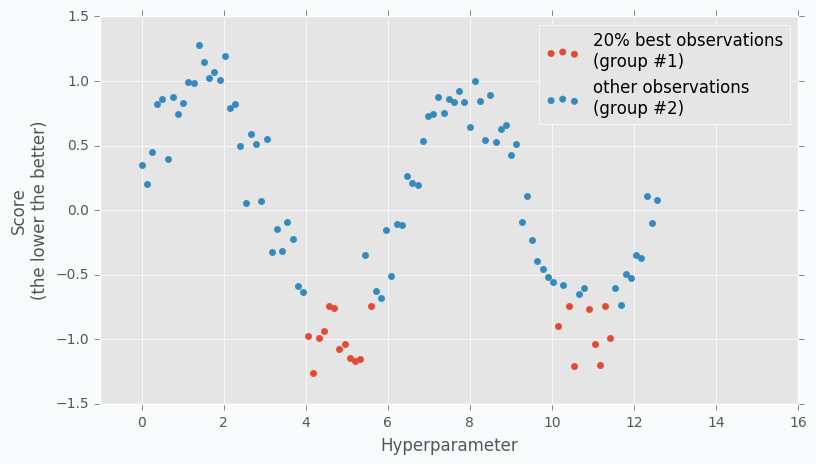

Once we have collected some data, we can finally apply TPE. The next step is to divide the collected observations into two groups. The first group contains the observations that gave the best scores after evaluation, and the second one contains all other observations. The goal is to find a set of parameters that is more likely to be in the first group and less likely to be in the second group. The fraction of the best observations is defined by the user as a parameter of the TPE algorithm. Typically, it’s 10-25% of the observations.

As you can see, we no longer rely on a single best observation. Instead, we use the distribution of the best observations. The more iterations we use during Random Search, the better the distribution we have at the beginning.

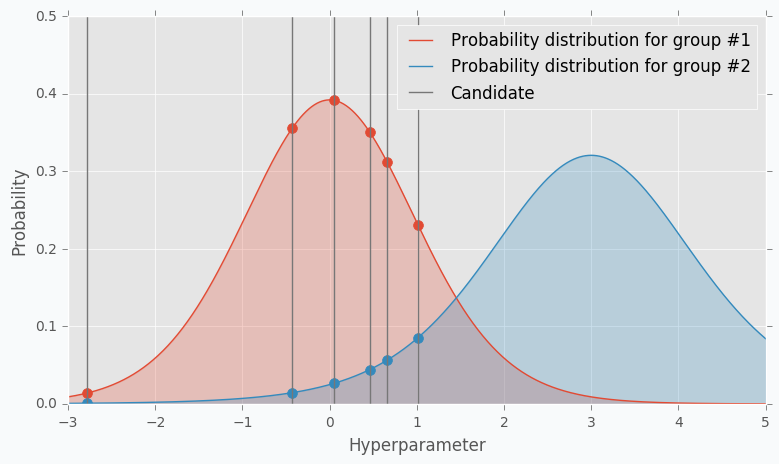

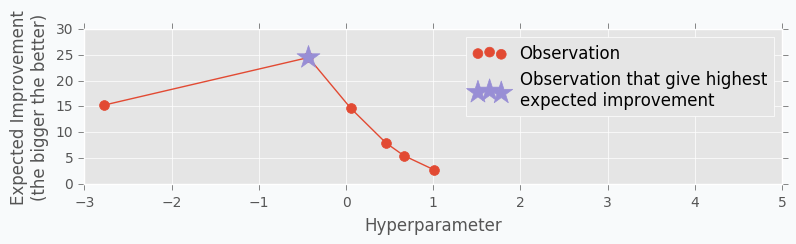
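Here is a minimal sketch of the split, assuming a plain fraction of the sorted observations. The function name and the sample data are made up, and real implementations may choose the size of the best group differently.

```python
def split_observations(observations, gamma=0.2):
    # observations: list of (parameter_value, score); lower score is better.
    ranked = sorted(observations, key=lambda item: item[1])
    # Keep at least one observation in the "best" group.
    n_best = max(1, int(round(gamma * len(ranked))))
    return ranked[:n_best], ranked[n_best:]

# Made-up (step size, validation loss) pairs from ten warm-up trials.
observations = [
    (0.05, 0.91), (0.10, 0.40), (0.20, 0.35), (0.30, 0.52), (0.40, 0.77),
    (0.12, 0.38), (0.25, 0.45), (0.45, 0.88), (0.02, 0.95), (0.18, 0.33),
]

best, rest = split_observations(observations, gamma=0.2)
print("best group:", best)
print("other group size:", len(rest))
```

With `gamma=0.2` and ten observations, the two lowest losses form the first group and the remaining eight form the second.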

The next part of TPE is to model the likelihood for each of the two groups. This is the next big difference between the Gaussian Process and TPE: for the Gaussian Process we modeled the posterior probability instead of the likelihood. Using the likelihood from the first group (the one that contains the best observations) we sample a bunch of candidates. Among the sampled candidates we look for the one that is more likely to be in the first group and less likely to be in the second one. The following formula defines the Expected Improvement for each candidate.

\[EI(x) = \frac{l(x)}{g(x)}\]

Here \(l(x)\) is the probability of being in the first group and \(g(x)\) is the probability of being in the second group.

Here is an example. Let’s say we have a predefined distribution for each of the two groups. From group #1, we sample 6 candidates, and for each one we calculate the Expected Improvement. The candidate with the highest Expected Improvement is the one that we will use for the next iteration.

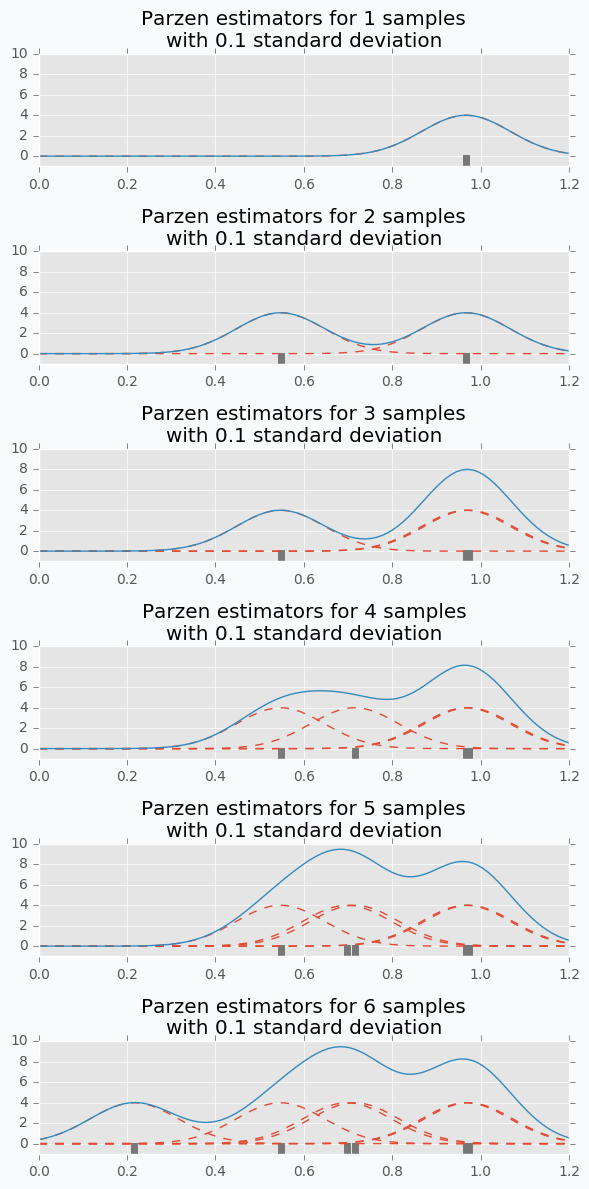

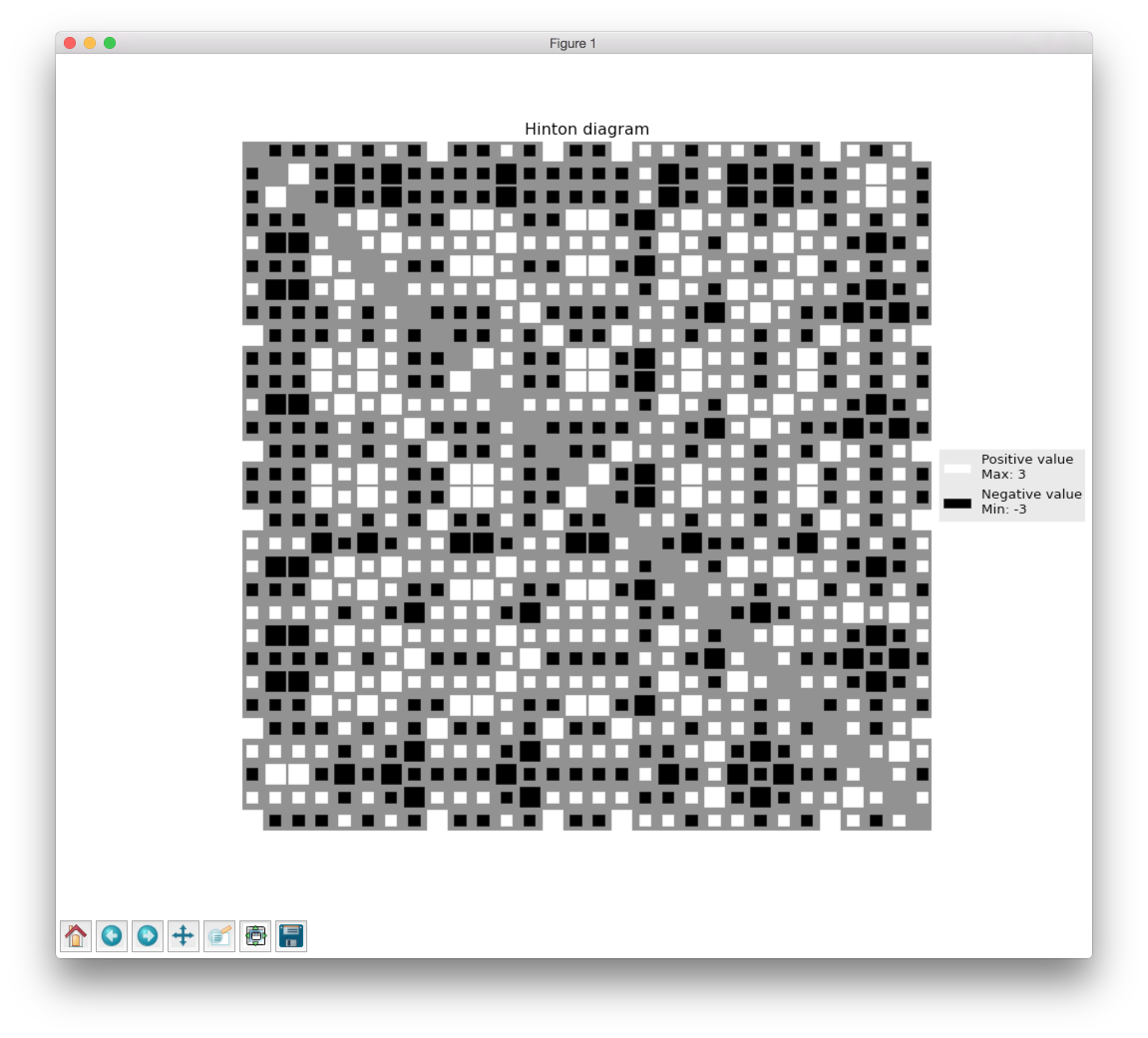

In the example, I’ve used t-distributions, but in TPE the distributions are modeled using Parzen-window density estimators. The main idea is that each sample defines a Gaussian distribution with a specified mean (the value of the hyperparameter) and standard deviation. All these Gaussians are then stacked together and normalized to ensure that the output is a Probability Density Function (PDF). That’s why “Parzen estimators” appears in the name of the algorithm.
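The sampling and scoring step can be sketched with a very simple Parzen estimator. This is an illustration under stated assumptions, not the hyperopt implementation: it uses one fixed bandwidth for every Gaussian, and all function names and sample values are made up.

```python
import math
import random

def parzen_pdf(x, samples, bandwidth):
    # Average of Gaussian bumps, one centred on each observed value.
    # Dividing by the number of samples keeps the total area equal to 1.
    norm = 1.0 / (bandwidth * math.sqrt(2.0 * math.pi))
    bumps = (norm * math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)
    return sum(bumps) / len(samples)

def sample_from_parzen(samples, bandwidth, rng):
    # Pick one bump at random, then draw a value from that Gaussian.
    return rng.gauss(rng.choice(samples), bandwidth)

def suggest_next(best, rest, n_candidates=6, bandwidth=0.05, seed=0):
    rng = random.Random(seed)
    # Candidates are sampled from l(x), the density of the best group.
    candidates = [sample_from_parzen(best, bandwidth, rng) for _ in range(n_candidates)]
    # Score each candidate with l(x) / g(x); the small constant avoids
    # division by zero far away from every observation.
    scored = [
        (parzen_pdf(x, best, bandwidth) / (parzen_pdf(x, rest, bandwidth) + 1e-12), x)
        for x in candidates
    ]
    return max(scored)[1]

# Made-up hyperparameter values for the two groups.
best_values = [0.18, 0.20, 0.12]
rest_values = [0.05, 0.30, 0.40, 0.45, 0.02, 0.35, 0.50]

next_value = suggest_next(best_values, rest_values)
print("next value to evaluate:", next_value)
```

The fixed bandwidth is the main simplification here. It controls how wide each Gaussian is and therefore how smooth the estimated density looks.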

“Tree-structured” means that the parameter space is defined in the form of a tree. Later we will try to find the best number of layers for the network. In our case, we will try to decide whether it’s better to use one or two hidden layers. If we use two hidden layers, we should define the number of hidden units for the first and second layer independently. If we use one hidden layer, we don’t need to define the number of hidden units for the second hidden layer, because it doesn’t exist for that set of parameters. Basically, it means that the number of hidden units in the second hidden layer depends on the number of hidden layers. In other words, the parameters have tree-structured dependencies.
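A tree-structured space can be sketched as a sampler that first makes the root decision and only then samples the parameters that exist on the chosen branch. The function name and the 50 to 500 unit range are assumptions chosen for illustration.

```python
import random

def sample_architecture(rng):
    # Root decision: how many hidden layers to use.
    n_layers = rng.choice([1, 2])
    # One unit count per existing layer. Nothing is sampled for the
    # second layer when the network has only one hidden layer.
    n_units = [rng.randint(50, 500) for _ in range(n_layers)]
    return {"n_layers": n_layers, "n_units_layer": n_units}

rng = random.Random(0)
samples = [sample_architecture(rng) for _ in range(5)]
for sample in samples:
    print(sample)
```

Each sample carries exactly as many unit counts as it has layers, so the optimizer never has to reason about a parameter that has no effect.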

Hyperparameter optimization for MNIST dataset

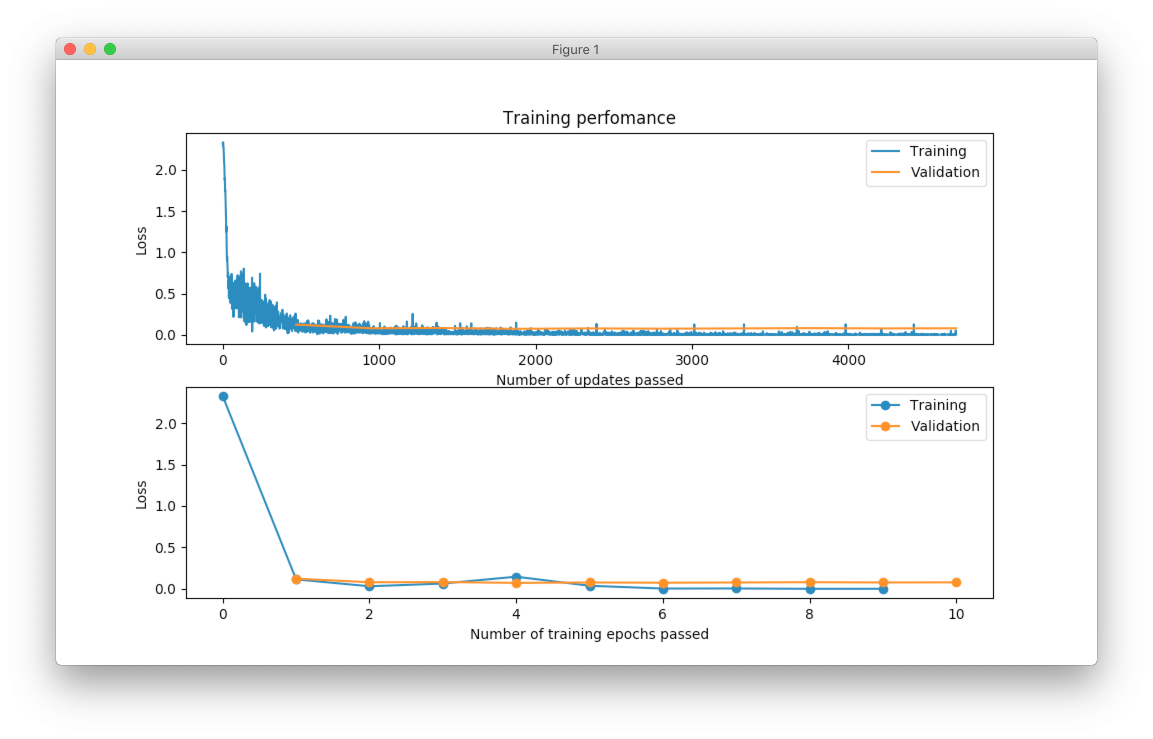

Let’s work through an example. We’re going to use the MNIST dataset.

import numpy as np
from sklearn import datasets, preprocessing
from sklearn.model_selection import train_test_split

# Download MNIST: 70,000 images with 784 features each
X, y = datasets.fetch_openml('mnist_784', version=1, return_X_y=True)

# One-hot encode the target classes
target_scaler = preprocessing.OneHotEncoder(sparse=False, categories='auto')
y = target_scaler.fit_transform(y.reshape(-1, 1))

# Rescale pixel values and center every feature
X /= 255.
X -= X.mean(axis=0)

x_train, x_test, y_train, y_test = train_test_split(
    X.astype(np.float32),
    y.astype(np.float32),
    test_size=(1 / 7.)
)

For hyperparameter selection, I’m going to use the hyperopt library, which implements the TPE algorithm.

The hyperopt library lets you define a prior distribution for each parameter. In the table below you can find information about the parameters that we are going to tune.

| Parameter name | Distribution | Values |
|---|---|---|
| Step size | Uniform | \(x \in [0.01, 0.5]\) |
| Batch size | Log-uniform integer | \(x \in [16, 512]\) |
| Activation function | Categorical | \(x \in \{Relu, PRelu, Elu, Sigmoid, Tanh\}\) |
| Number of hidden layers | Categorical | \(x \in \{1, 2\}\) |
| Number of units in the first layer | Uniform integer | \(x \in [50, 500]\) |
| Number of units in the second layer (if it is defined) | Uniform integer | \(x \in [50, 500]\) |
| Dropout probability | Uniform | \(x \in [0, 0.5]\) |

Here is one way to define our parameters in hyperopt.

import numpy as np
from hyperopt import hp
from neupy import layers

def uniform_int(name, lower, upper):
    # `quniform` returns:
    # round(uniform(low, high) / q) * q
    return hp.quniform(name, lower, upper, q=1)

def loguniform_int(name, lower, upper):
    # Do not forget to take the logarithm of the
    # lower and upper bounds.
    return hp.qloguniform(name, np.log(lower), np.log(upper), q=1)

parameter_space = {
    'step': hp.uniform('step', 0.01, 0.5),
    'layers': hp.choice('layers', [{
        'n_layers': 1,
        'n_units_layer': [
            uniform_int('n_units_layer_11', 50, 500),
        ],
    }, {
        'n_layers': 2,
        'n_units_layer': [
            uniform_int('n_units_layer_21', 50, 500),
            uniform_int('n_units_layer_22', 50, 500),
        ],
    }]),
    'act_func_type': hp.choice('act_func_type', [
        layers.Relu,
        layers.PRelu,
        layers.Elu,
        layers.Tanh,
        layers.Sigmoid
    ]),
    'dropout': hp.uniform('dropout', 0, 0.5),
    'batch_size': loguniform_int('batch_size', 16, 512),
}

I won’t get into details. I think the definitions are pretty clear from the code. If you want to learn more about hyperopt parameter space initialization, you can check this link.
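To get a feeling for what the two helper distributions produce, here is a standard-library sketch of the same formulas. It mimics the documented behaviour of `quniform` and `qloguniform` but does not call hyperopt, and the function names below are local to this example.

```python
import math
import random

def quniform(rng, low, high, q=1):
    # round(uniform(low, high) / q) * q, returned as a float
    return float(round(rng.uniform(low, high) / q) * q)

def qloguniform(rng, log_low, log_high, q=1):
    # round(exp(uniform(log_low, log_high)) / q) * q, returned as a float.
    # The bounds are given in log space, which is why the parameter space
    # definition wraps them in np.log.
    return float(round(math.exp(rng.uniform(log_low, log_high)) / q) * q)

rng = random.Random(0)
units = [quniform(rng, 50, 500) for _ in range(1000)]
batch_sizes = [qloguniform(rng, math.log(16), math.log(512)) for _ in range(1000)]

# Log-uniform sampling puts roughly as many draws between 16 and 32
# as between 256 and 512, because both intervals span one doubling.
small = sum(16 <= b <= 32 for b in batch_sizes)
large = sum(256 <= b <= 512 for b in batch_sizes)
print("batch sizes in [16, 32]:", small)
print("batch sizes in [256, 512]:", large)
```

This is the reason a log-uniform prior suits batch size: small values get as much attention as large ones, instead of most samples landing in the upper part of the range.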

Next, we need to construct the function that we want to minimize. In our case, the function should train the network on the training dataset and return the cross-entropy error on the validation dataset.

from pprint import pprint
from sklearn import metrics

def train_network(parameters):
    print("Parameters:")
    pprint(parameters)
    print()

First of all, in the training function, we need to extract our parameters.

    step = parameters['step']
    batch_size = int(parameters['batch_size'])
    proba = parameters['dropout']
    activation_layer = parameters['act_func_type']
    layer_sizes = [int(n) for n in parameters['layers']['n_units_layer']]

Note that I converted some of the parameters to integers. The problem is that hyperopt returns floats for them, so we need to convert them.

Next, we need to construct the network from these parameters. In our case, we use only one or two hidden layers, but it can be any number of layers.

    from neupy import layers

    network = layers.Input(784)

    for layer_size in layer_sizes:
        network = network >> activation_layer(layer_size)

    network = network >> layers.Dropout(proba) >> layers.Softmax(10)

To learn more about layers in NeuPy, you should check the documentation.

After that, we can define the training algorithm for the network.

    from neupy import algorithms
    from neupy.exceptions import StopTraining

    def on_epoch_end(network):
        if network.training_errors[-1] > 10:
            raise StopTraining("Training was interrupted. Error is too high.")

    mnet = algorithms.RMSProp(
        network,
        batch_size=batch_size,
        step=step,
        loss='categorical_crossentropy',
        shuffle_data=True,
        signals=on_epoch_end,
    )

All settings should be clear from the code. You can check the RMSProp documentation to find more information about its input parameters. In addition, I’ve added a simple rule that interrupts training when the error is too high. This is just an example of such a rule, and it can be changed.

Now we can train our network.

    mnet.train(x_train, y_train, epochs=50)

And at the end of the function, we can check some information about the training progress.

    score = mnet.score(x_test, y_test)

    y_predicted = mnet.predict(x_test).argmax(axis=1)
    accuracy = metrics.accuracy_score(y_test.argmax(axis=1), y_predicted)

    print("Final score: {}".format(score))
    print("Accuracy: {:.2%}".format(accuracy))

    return score

You can see that I’ve used two evaluation metrics. The first one is cross-entropy. NeuPy uses it as the validation error function when we call the score method. The second one is simply prediction accuracy.
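The two metrics measure different things, which a tiny standard-library sketch can show. The helper functions and the probability values are made up for illustration and are not NeuPy or scikit-learn code.

```python
import math

def cross_entropy(y_true, y_prob, eps=1e-12):
    # Mean negative log-probability assigned to the correct class.
    total = 0.0
    for target, probs in zip(y_true, y_prob):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(target, probs))
    return total / len(y_true)

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def accuracy(y_true, y_prob):
    # Fraction of samples where the most probable class is the correct one.
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_prob))
    return hits / len(y_true)

y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
confident = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.05, 0.05, 0.9]]
hesitant = [[0.4, 0.3, 0.3], [0.3, 0.4, 0.3], [0.3, 0.3, 0.4]]

for name, y_prob in [("confident", confident), ("hesitant", hesitant)]:
    print(name, accuracy(y_true, y_prob), cross_entropy(y_true, y_prob))
```

Both sets of predictions are 100% accurate, but the hesitant one has a much higher cross-entropy. Cross-entropy is therefore the more sensitive score of the two, which makes it a reasonable value to minimize during the search.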

And finally, we run hyperparameter optimization.

import hyperopt
from functools import partial

# Object stores all information about each trial.
# Also, it stores information about the best trial.
trials = hyperopt.Trials()

tpe = partial(
    hyperopt.tpe.suggest,

    # Sample 1000 candidates and select the candidate that
    # has the highest Expected Improvement (EI)
    n_EI_candidates=1000,

    # Use 20% of best observations to estimate next
    # set of parameters
    gamma=0.2,

    # First 20 trials are going to be random
    n_startup_jobs=20,
)

hyperopt.fmin(
    train_network,

    trials=trials,
    space=parameter_space,

    # Set up TPE for hyperparameter optimization
    algo=tpe,

    # Maximum number of iterations. Basically it trains at
    # most 200 networks before selecting the best one.
    max_evals=200,
)

And after all trials, we can check the best one in the trials.best_trial attribute.

Disadvantages of TPE

One of the biggest disadvantages of this algorithm is that it selects parameters independently of each other. For instance, there is a clear relation between regularization and the number of training epochs. With regularization, we can usually train the network for more epochs, and with more epochs we can achieve better results. Without regularization, on the other hand, many epochs can be a bad choice because the network starts overfitting and the validation error increases. If the state of the regularization variable is not taken into account, each next choice for the number of epochs can look arbitrary.

This is manageable if you know that some variables are related. To overcome the problem from the previous example, you can construct two different choices for epochs. The first one enables regularization and selects the number of epochs from the \([500, 1000]\) range. The second one disables regularization and selects the number of epochs from the \([20, 300]\) range. Note that each hyperopt expression needs its own unique label.

hp.choice('training_parameters', [
    {
        'regularization': True,
        'n_epochs': hp.quniform('n_epochs_with_reg', 500, 1000, q=1),
    }, {
        'regularization': False,
        'n_epochs': hp.quniform('n_epochs_without_reg', 20, 300, q=1),
    },
])

Summary

The Bayesian Optimization and TPE algorithms show a great improvement over the classic hyperparameter optimization methods. They learn from the training history and give better and better estimates for the next set of parameters. But it still takes a lot of time to apply these algorithms. It’s great if you have access to multiple machines and can parallelize the parameter tuning procedure [4], but usually that’s not an option. Sometimes it’s better to avoid hyperparameter optimization altogether. If you are building a network for a standard problem like image classification, it’s better to use existing architectures with pre-trained parameters, like VGG19 or ResNet.

For unique problems that don’t have pre-trained networks, classic and simple hand-tuning is a great way to start. A few iterations can give you a good architecture, which won’t be state-of-the-art but should give you a satisfying result with a minimum of problems. If the accuracy does not meet your needs, you can always boost performance by getting more data or by building ensembles of different models.

Source Code

All source code is available on GitHub in an IPython notebook. It includes all visualizations and hyperparameter selection algorithms.

References

| [1] | Bayesian Optimization and Acquisition Functions from http://www.cse.wustl.edu/~garnett/cse515t/files/lecture_notes/12.pdf |

| [2] | Gaussian Processes in Machine Learning from http://mlg.eng.cam.ac.uk/pub/pdf/Ras04.pdf |

| [3] | Slides: Introduction to Gaussian Process from https://www.cs.toronto.edu/~hinton/csc2515/notes/gp_slides_fall08.pdf |

| [4] | Preliminary Evaluation of Hyperopt Algorithms on HPOLib from http://compneuro.uwaterloo.ca/files/publications/bergstra.2014.pdf |

| [5] | Algorithms for Hyper-Parameter Optimization from http://papers.nips.cc/paper/4443-algorithms-for-hyper-parameter-optimization.pdf |

| [6] | Slides: Pattern Recognition, Lecture 6 from http://www.csd.uwo.ca/~olga/Courses/CS434a_541a/Lecture6.pdf |

| [7] | Low-discrepancy sampling methods from http://planning.cs.uiuc.edu/node210.html |

| [8] | Parzen-Window Density Estimation from https://www.cs.utah.edu/~suyash/Dissertation_html/node11.html |

Image classification, MNIST digits

This short tutorial shows how to build and train a simple network for digit classification in NeuPy.

Data preparation

Data can be loaded in different ways. I used scikit-learn to fetch the MNIST dataset.

>>> from sklearn import datasets
>>> X, y = datasets.fetch_openml('mnist_784', version=1, return_X_y=True)

Now that we have the data, we need to confirm that we have the expected number of samples.

>>> X.shape
(70000, 784)
>>> y.shape
(70000,)

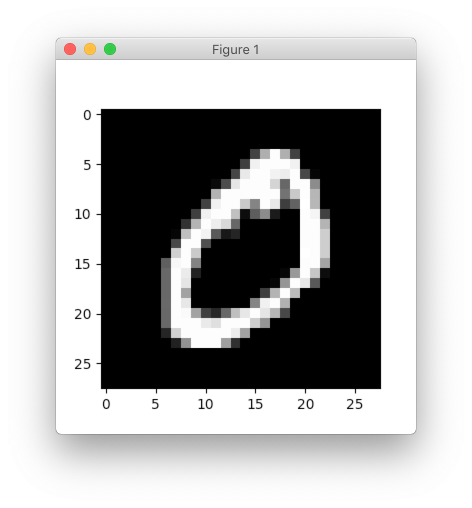

Every data sample has 784 features, and they can be reshaped into a 28x28 image.

>>> import matplotlib.pyplot as plt
>>> plt.imshow(X[0].reshape((28, 28)), cmap='gray')
>>> plt.show()

In this tutorial, we will use each image as a vector, so we won’t need to reshape it to its original size. The only thing that we need to do is to rescale the image values. Rescaling the images will help the network to converge faster.

>>> import numpy as np
>>> X = X.astype(np.float32)
>>> X /= 255.
>>> X -= X.mean(axis=0)

Notice the way the division and subtraction are specified. This way, we update the X matrix directly, without copying it. It can be validated with a simple example.

>>> import numpy as np
>>> A = np.random.random((100, 10))
>>> id(A)  # numbers will be different between runs
4486892960
>>>
>>> A -= 3
>>> id(A)  # object ID didn't change
4486892960
>>>
>>> A = A - 3
>>> id(A)  # and now it's different, because it's a different object
4602409968

After the last update of matrix A we got a different identifier for the object, which means that it was copied.

With in-place updates, we don’t waste memory. The current dataset is relatively small and memory is not a concern, but for larger datasets it might make a big difference.

There is one more processing step that we need to do before we can train our network. Let’s take a look at the target classes.

>>> import random
>>> random.sample(y.astype('int').tolist(), 10)
[9, 0, 9, 7, 2, 2, 3, 0, 0, 8]

All the numbers that we have are specified as integers. For our problem, we want the network to learn a visual representation of the numbers. We cannot use them as integers, because it would create problems during training. Basically, with the integer definition we’re implying that the number 1 is visually more similar to 0 than to 7. It happens only because the difference between 1 and 0 is smaller than the difference between 1 and 7. In order to avoid making any assumptions of this kind, we will use the one-hot encoding technique.

>>> from sklearn.preprocessing import OneHotEncoder
>>> encoder = OneHotEncoder(sparse=False)
>>> y = encoder.fit_transform(y.reshape(-1, 1))
>>> y.shape
(70000, 10)
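The effect of the encoding on distances between classes can be checked with a small standard-library sketch. The `one_hot` and `squared_distance` helpers are written just for this illustration and are not part of scikit-learn.

```python
def one_hot(labels, n_classes):
    # Build one row per label with a single 1.0 at the label's index.
    encoded = []
    for label in labels:
        row = [0.0] * n_classes
        row[label] = 1.0
        encoded.append(row)
    return encoded

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

codes = one_hot([0, 1, 7], n_classes=10)

# As integers, 1 is closer to 0 than to 7. After encoding, every
# pair of distinct digits is equally far apart.
print(squared_distance(codes[1], codes[0]))  # 2.0
print(squared_distance(codes[1], codes[2]))  # 2.0
```

Since all classes are now equally distant from each other, the encoding no longer implies that some digits are more similar than others.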